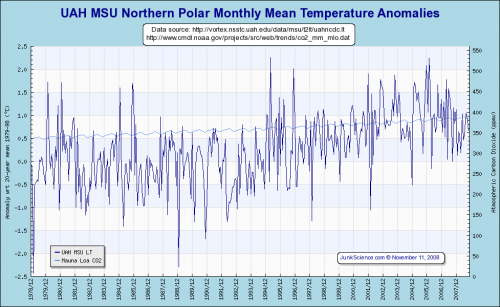

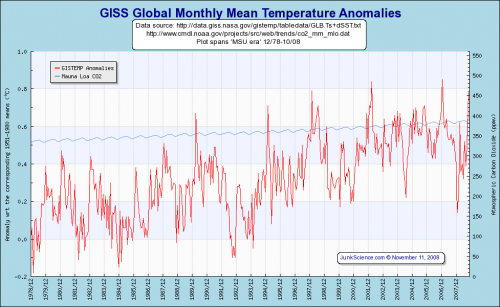

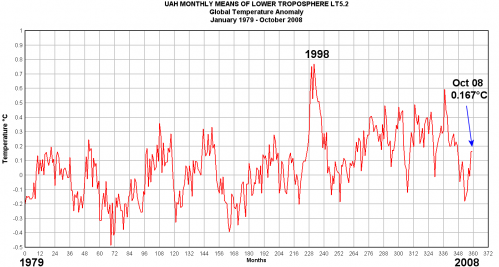

By accident, I have been drawn into a discussion surrounding a fairly innocent mistake made by NASA’s GISS in their October global temperature numbers. It began for me when I compared the GISS and UAH satellite numbers for October and saw an incredible divergence. For years these two data sets have shown a growing gap, but by tiny increments. In October, though, they really went in opposite directions. I used this occasion to call on the climate community to make a legitimate effort at validating and reconciling the GISS and satellite data sets.

Within a day of my making this post, several folks started noticing some oddities in the GISS October data, and eventually the hypothesis emerged that the high number was the result of reusing September numbers for certain locations in the October data set. Oh, OK. A fairly innocent and probably understandable mistake, and far more minor than the more systematic error that a similar group of skeptics (particularly Steve McIntyre, the man whose name the GISS cannot speak) found in the GISS data set a while back. The only amazing thing to me was not the mistake, but the fact that there were laymen out there on their own time who figured out the error so quickly after the data release. I wish there were a team of folks following me around, fixing material errors in my analysis before I ran too far with it.

So Gavin Schmidt of NASA comes out a day or two later and says, yep, they screwed up. End of story, right? Except Dr. Schmidt chose his blog post about the error to lash out at skeptics. This is so utterly human: in the light of day, most will admit it is a bad idea to lash out at your detractors in the same instant you admit they caught you in an error (however minor). But it is such a human need to try to recover and soothe one’s own ego at exactly this same time. And thus we get Gavin Schmidt’s post on RealClimate.org, which I would like to highlight a bit below.

He begins with a couple of paragraphs on the error itself. I will skip these, but you are welcome to check them out at the original. Nothing about the error seems in any way outside the category of “mistakes happen.” Had the post ended with something like “Many thanks to the volunteers who so quickly helped us find this problem,” I would not even be posting. But, as you can guess, this is not how it ends.

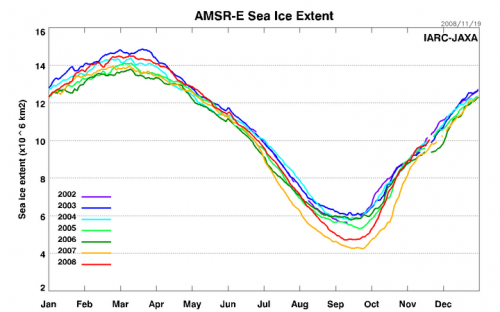

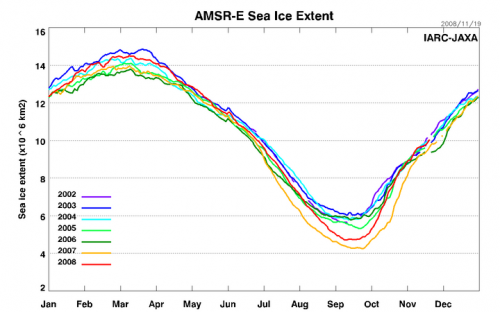

It’s clearly true that the more eyes there are looking, the faster errors get noticed and fixed. The cottage industry that has sprung up to examine the daily sea ice numbers or the monthly analyses of surface and satellite temperatures, has certainly increased the number of eyes and that is generally for the good. Whether it’s a discovery of an odd shift in the annual cycle in the UAH MSU-LT data, or this flub in the GHCN data, or the USHCN/GHCN merge issue last year, the extra attention has led to improvements in many products. Nothing of any consequence has changed in terms of our understanding of climate change, but a few more i’s have been dotted and t’s crossed.

Uh, OK, but it is a bit unfair to characterize the “cottage industry” looking over Hansen’s and Schmidt’s shoulders as only working out at the third decimal place. Skeptics have pointed out what they consider to be fundamental issues in some of their analytical approaches, including their methods for compensating statistically for biases and discontinuities in the measurement data the GISS rolls up into a global temperature anomaly. A fairly large body of amateur and professional work exists questioning the NOAA and GISS methodologies, which often result in manual adjustments to the raw data that are larger in magnitude than the underlying warming signal they are trying to measure. I personally think there is a good case to be made that the GISS approach is not sufficient to handle this low signal-to-noise data, and that the GISS has descended into “see no evil, hear no evil” mode in ignoring the station survey approach being led by Anthony Watts. Just because Schmidt does not agree doesn’t mean that the cause of climate science is not being advanced.

The bottom line, as I pointed out in my original post, is that the GISS anomaly and the satellite-measured anomaly are steadily diverging. Given some of the inherent biases and problems of surface temperature measurement, and NASA’s commitment to space technology as well as its traditional GISS metric, it’s amazing to me that Schmidt and Hansen are effectively punting instead of doing any serious work to reconcile the two metrics. So it is not surprising that into this vacuum left by Schmidt rush others, including us lowly amateurs.

By the way, this is the second time in about a year that the GISS has admitted an error in their data set, but petulantly refused to mention the name of the person who helped them find it.

But unlike in other fields of citizen-science (astronomy or phenology spring to mind), the motivation for the temperature observers is heavily weighted towards wanting to find something wrong. As we discussed last year, there is a strong yearning among some to want to wake up tomorrow and find that the globe hasn’t been warming, that the sea ice hasn’t melted, that the glaciers have not receded and that indeed, CO2 is not a greenhouse gas. Thus when mistakes occur (and with science being a human endeavour, they always will) the exuberance of the response can be breathtaking – and quite telling.

I am going to make an admission here that Dr. Schmidt very clearly thinks is evil: Yes, I want to wake up tomorrow to proof that the climate is not changing catastrophically. I desperately hope Schmidt is overestimating future anthropogenic global warming. Here is something to consider. Take two different positions:

- I hope global warming theory is correct and the world faces stark tradeoffs between environmental devastation and continued economic growth and modern prosperity

- I hope global warming theory is over-stated and that these tradeoffs are not as stark.

Which is more moral? Why do I have to apologize for being in camp #2? Why isn’t it equally “telling” that Dr. Schmidt apparently puts himself in camp #1?

Of course, we skeptics would say the same of Schmidt. As much as we would like to find a cooler number, we believe he wants to find a warmer number. Right or wrong, most of us see a pattern in the fact that the GISS seems to constantly find ways to adjust the numbers to show a larger historic warming, but requires a nudge from outsiders to recognize when their numbers are too high. The fairest way to put it is that one group expects to see lower numbers and so tends to put more scrutiny on the high numbers, and the other does the opposite.

Really, I don’t think that Dr. Schmidt is a very good student of the history of science when he argues that this is somehow unique to or an aberration in modern climate science. Science has often depended on rivalries to ensure that skepticism is applied to both positive and negative results of any experiment. From phlogiston to plate tectonics, from evolution to string theory, there is really nothing new in the dynamic he describes.

A few examples from the comments at Watt’s blog will suffice to give you a flavour of the conspiratorial thinking: “I believe they had two sets of data: One would be released if Republicans won, and another if Democrats won.”, “could this be a sneaky way to set up the BO presidency with an urgent need to regulate CO2?”, “There are a great many of us who will under no circumstance allow the oppression of government rule to pervade over our freedom—-PERIOD!!!!!!” (exclamation marks reduced enormously), “these people are blinded by their own bias”, “this sort of scientific fraud”, “Climate science on the warmer side has degenerated to competitive lying”, etc… (To be fair, there were people who made sensible comments as well).

Dr. Schmidt, I am a pretty smart person. I have lots of little diplomas on my wall with technical degrees from Ivy League universities. And you know what – I am sometimes blinded by my own biases. I consider myself a better thinker, a better scientist, and a better decision-maker because I recognize that fact. The only person who I would worry about being biased is the one who swears that he is not.

By the way, I thought the little game of mining the comments section of Internet blogs to discredit the proprietor went out of vogue years ago, or at least has been relegated to the more extreme political blogs like Kos or LGF. Do you really think I could not spend about 12 seconds poking around environmentally-oriented web sites and find stuff just as unfair, extreme, or poorly thought out?

The amount of simply made up stuff is also impressive – the GISS press release declaring the October the ‘warmest ever’? Imaginary (GISS only puts out press releases on the temperature analysis at the end of the year). The headlines trumpeting this result? Non-existent. One clearly sees the relief that finally the grand conspiracy has been rumbled, that the mainstream media will get it’s comeuppance, and that surely now, the powers that be will listen to those voices that had been crying in the wilderness.

I am not quite sure what he is referring to here. I will repeat what I wrote. I said “The media generally uses the GISS data, so expect stories in the next day or so trumpeting ‘Hottest October Ever.’” I leave it to readers to decide if they find my supposition unwarranted. However, I encourage the reader to consider the 556,000 Google results, many of them media stories, that come up in a search for the words “hottest month ever.” Also, while the GISS may not issue monthly press releases for this type of thing, the NOAA and British Met Office clearly do, and James Hansen has made many verbal statements of this sort in the past.

By the way, keep in mind that Dr. Schmidt likes to play Clinton-like games with words. I recall one episode last year when he said that climate models did not use the temperature station data, so they cannot be tainted with any biases found in the stations. Literally true, I guess, because the models use gridded cell data. However, this gridded cell data is built up from the station data, using a series of correction and smoothing algorithms that many find suspect. Keep this in mind when parsing Dr. Schmidt.

Alas! none of this will come to pass. In this case, someone’s programming error will be fixed and nothing will change except for the reporting of a single month’s anomaly. No heads will roll, no congressional investigations will be launched, no politicians (with one possible exception) will take note. This will undoubtedly be disappointing to many, but they should comfort themselves with the thought that the chances of this error happening again has now been diminished. Which is good, right?

I’m narrowly fine with the outcome. Certainly no heads should roll over a minor data error. I’m not certain no one like Watts or McIntyre suggested such a thing. However, the GISS should be embarrassed that they have not addressed and been more open about the issues in their grid cell correction/smoothing algorithms, and they really owe us an explanation of why no one there is even trying to reconcile the growing differences with satellite data.

In contrast to this molehill, there is an excellent story about how the scientific community really deals with serious mismatches between theory, models and data. That piece concerns the ‘ocean cooling’ story that was all the rage a year or two ago. An initial analysis of a new data source (the Argo float network) had revealed a dramatic short term cooling of the oceans over only 3 years. The problem was that this didn’t match the sea level data, nor theoretical expectations. Nonetheless, the paper was published (somewhat undermining claims that the peer-review system is irretrievably biased) to great acclaim in sections of the blogosphere, and to more muted puzzlement elsewhere. With the community’s attention focused on this issue, it wasn’t however long before problems turned up in the Argo floats themselves, but also in some of the other measurement devices – particularly XBTs. It took a couple of years for these things to fully work themselves out, but the most recent analyses show far fewer of the artifacts that had plagued the ocean heat content analyses in the past. A classic example in fact, of science moving forward on the back of apparent mismatches. Unfortunately, the resolution ended up favoring the models over the initial data reports, and so the whole story is horribly disappointing to some.

OK, fine, I have no problem with this. However, and I am sure that Schmidt would deny this to his grave, he is FAR more supportive of open inspection of measurement sources that disagree with his hypothesis (e.g. Argo, UAH) than he is of scrutiny of his own methods. Heck, until last year, he wouldn’t even release most of the algorithms and code for the grid cell analysis that goes into the GISS metric, despite the fact that he is a government employee and the work is paid for with public funds. If he is so confident, I would love to see him throw open the whole GISS measurement process to an outside audit. We would ask the UAH and RSS guys to do the same. Here is my prediction, and if I am wrong I will apologize to Dr. Schmidt, but I am almost positive that while the UAH folks would say yes, the GISS would say no. The result, as he says, would likely be telling.

Which brings me to my last point, the role of models. It is clear that many of the temperature watchers are doing so in order to show that the IPCC-class models are wrong in their projections. However, the direct approach of downloading those models, running them and looking for flaws is clearly either too onerous or too boring. Even downloading the output (from here or here) is eschewed in favour of firing off Freedom of Information Act requests for data already publicly available – very odd. For another example, despite a few comments about the lack of sufficient comments in the GISS ModelE code (a complaint I also often make), I am unaware of anyone actually independently finding any errors in the publicly available Feb 2004 version (and I know there are a few). Instead, the anti-model crowd focuses on the minor issues that crop up every now and again in real-time data processing hoping that, by proxy, they’ll find a problem with the models.

I say good luck to them. They’ll need it.

Red Alert! Red Alert! Up to this point, the article was just petulant and bombastic. But here, Schmidt becomes outright dangerous, suggesting a scientific process that is utterly without merit. But I want to take some time on this, so I will pull this out into a second post I will label part 2.