I cannot recommend Matt Ridley’s new article strongly enough. It covers a lot of ground, but here are a few highlights.

Ridley argues that science generally works (in a manner entirely parallel to how well-functioning commercial markets work) because there are generally incentives to challenge hypotheses. I would add that if anything, the incentives tend to be balanced more towards challenging conventional wisdom. If someone puts a stake in the ground and says that A is true, then there is a lot more money and prestige awarded to someone who can prove A is not true than for the thirteenth person to confirm that A is indeed true.

This process breaks, however, when political pressures undermine this natural market of ideas and turn the incentives for challenging hypotheses into punishments.

Lysenkoism, a pseudo-biological theory that plants (and people) could be trained to change their heritable natures, helped starve millions and yet persisted for decades in the Soviet Union, reaching its zenith under Nikita Khrushchev. The theory that dietary fat causes obesity and heart disease, based on a couple of terrible studies in the 1950s, became unchallenged orthodoxy and is only now fading slowly.

What these two ideas have in common is that they had political support, which enabled them to monopolise debate. Scientists are just as prone as anybody else to “confirmation bias”, the tendency we all have to seek evidence that supports our favoured hypothesis and dismiss evidence that contradicts it—as if we were counsel for the defence. It’s tosh that scientists always try to disprove their own theories, as they sometimes claim, and nor should they. But they do try to disprove each other’s. Science has always been decentralised, so Professor Smith challenges Professor Jones’s claims, and that’s what keeps science honest.

What went wrong with Lysenko and dietary fat was that in each case a monopoly was established. Lysenko’s opponents were imprisoned or killed. Nina Teicholz’s book The Big Fat Surprise shows in devastating detail how opponents of Ancel Keys’s dietary fat hypothesis were starved of grants and frozen out of the debate by an intolerant consensus backed by vested interests, echoed and amplified by a docile press….

This is precisely what has happened with the climate debate and it is at risk of damaging the whole reputation of science.

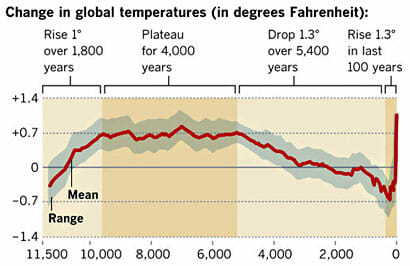

This is one example of the consequences:

Look what happened to a butterfly ecologist named Camille Parmesan when she published a paper on “Climate and Species Range” that blamed climate change for threatening the Edith checkerspot butterfly with extinction in California by driving its range northward. The paper was cited more than 500 times, she was invited to speak at the White House and she was asked to contribute to the IPCC’s third assessment report.

Unfortunately, a distinguished ecologist called Jim Steele found fault with her conclusion: there had been more local extinctions in the southern part of the butterfly’s range due to urban development than in the north, so only the statistical averages moved north, not the butterflies. There was no correlated local change in temperature anyway, and the butterflies have since recovered throughout their range. When Steele asked Parmesan for her data, she refused. Parmesan’s paper continues to be cited as evidence of climate change. Steele meanwhile is derided as a “denier”. No wonder a highly sceptical ecologist I know is very reluctant to break cover.

He also goes on to lament something that is very familiar to me: there is a strong argument for the lukewarmer position, but the media will not even acknowledge it exists. Either you are a full-on believer or you are a denier.

The IPCC actually admits the possibility of lukewarming within its consensus, because it gives a range of possible future temperatures: it thinks the world will be between about 1.5 and four degrees warmer on average by the end of the century. That’s a huge range, from marginally beneficial to terrifyingly harmful, so it is hardly a consensus of danger, and if you look at the “probability density functions” of climate sensitivity, they always cluster towards the lower end.

What is more, in the small print describing the assumptions of the “representative concentration pathways”, it admits that the top of the range will only be reached if sensitivity to carbon dioxide is high (which is doubtful); if world population growth re-accelerates (which is unlikely); if carbon dioxide absorption by the oceans slows down (which is improbable); and if the world economy goes in a very odd direction, giving up gas but increasing coal use tenfold (which is implausible).

But the commentators ignore all these caveats and babble on about warming of “up to” four degrees (or even more), then castigate as a “denier” anybody who says, as I do, the lower end of the scale looks much more likely given the actual data. This is a deliberate tactic. Following what the psychologist Philip Tetlock called the “psychology of taboo”, there has been a systematic and thorough campaign to rule out the middle ground as heretical: not just wrong, but mistaken, immoral and beyond the pale. That’s what the word denier with its deliberate connotations of Holocaust denial is intended to do. For reasons I do not fully understand, journalists have been shamefully happy to go along with this fundamentally religious project.

The whole thing reads like a lukewarmer manifesto. Honestly, Ridley writes about 1000% better than I do, so rather than my trying to summarize it, go read it.