I have often written warnings about the difficulty of modeling complex systems. My mechanical engineering degree was focused on the behavior and modeling of dynamic systems. Since then, I have spent years doing financial, business, and economic modeling. And all that experience has taught me humility, as well as given me a good knowledge of where modelers tend to cheat.

Al Gore has argued that we should trust long-term models, because Wall Street has used such models successfully for years (I am not sure he has been using this argument lately, lol). I was immediately skeptical of this statement. First, Wall Street almost never makes 100-year bets based on models (they may be investing in 30-year securities, but the bets they are making are much shorter term). Second, my understanding of Wall Street history is that lower Manhattan is littered with the carcasses of traders who bankrupted themselves following the hot model of the moment. It is ever so easy to create a correlation model that seems to back-cast well. But no one has ever created one that holds up well going forward.

A reader sent me this article about the Gaussian copula, apparently the algorithm that underlay the correlation models Wall Streeters used to assess mortgage security and derivative risk.

Wall Streeters have the exact same problem that climate modelers have. There is a single output variable they both care about (security price for traders, global temperature for modelers). This variable’s value changes in a staggeringly complex system full of millions of variables with various levels of cross-correlation. The modelers’ challenge is to look at the historical data and try to tease out correlation factors between their output variable and all the other input variables, in an environment where they are all changing at once.

The problem is compounded because some of the input variables move on really long cycles, and some move on short cycles. Some of these move in such long cycles that we may not even recognize the cycle at all. In the end, this tripped up the financial modelers — all of their models derived correlation factors from a long and relatively unbroken period of home price appreciation. Thus, when this cycle started to change, all the models fell apart.

Li’s copula function was used to price hundreds of billions of dollars’ worth of CDOs filled with mortgages. And because the copula function used CDS prices to calculate correlation, it was forced to confine itself to looking at the period of time when those credit default swaps had been in existence: less than a decade, a period when house prices soared. Naturally, default correlations were very low in those years. But when the mortgage boom ended abruptly and home values started falling across the country, correlations soared.

I never criticize people for trying to do an analysis with the data they have. If they have only 10 years of data, that’s as far as they can run the analysis. However, it is then important that they recognize that their analysis is based on data that may be way too short to measure longer term trends.
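To make the short-window problem concrete, here is a toy sketch of my own. It is not Li's copula and not anything from the article: the two "mortgage pools", the `shared_vol` parameter, and the sample sizes are all hypothetical. Each pool's losses are one shared housing-market shock plus independent noise, and the only thing that differs between the two regimes is how large the shared shock is.

```python
import random


def pearson(xs, ys):
    """Plain Pearson correlation coefficient of two equal-length lists."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / (var_x * var_y) ** 0.5


def simulate_losses(n, shared_vol, rng):
    """Losses on two hypothetical pools: a shared shock plus independent noise.

    shared_vol is an assumed knob for how much the housing market as a
    whole is moving; the idiosyncratic noise always has standard deviation 1.
    """
    pool_a, pool_b = [], []
    for _ in range(n):
        shared = rng.gauss(0, shared_vol)
        pool_a.append(shared + rng.gauss(0, 1))
        pool_b.append(shared + rng.gauss(0, 1))
    return pool_a, pool_b


rng = random.Random(0)  # fixed seed so the sketch is repeatable

# "Boom" years: the shared shock is small, so the pools look nearly independent.
boom_a, boom_b = simulate_losses(2000, 0.2, rng)
# "Bust" years: the shared shock dominates, so the pools move together.
bust_a, bust_b = simulate_losses(2000, 3.0, rng)

boom_corr = pearson(boom_a, boom_b)
bust_corr = pearson(bust_a, bust_b)

print(f"correlation estimated from boom data only: {boom_corr:.2f}")
print(f"correlation once the regime changes:       {bust_corr:.2f}")
```

A modeler who only has the boom sample would measure a correlation near zero and have no way, from that data alone, to see the much higher correlation waiting in the other regime.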

As is typical when models go wrong, early problems in the model did not cause users to revisit their assumptions:

His method was adopted by everybody from bond investors and Wall Street banks to ratings agencies and regulators. And it became so deeply entrenched—and was making people so much money—that warnings about its limitations were largely ignored.

Then the model fell apart. Cracks started appearing early on, when financial markets began behaving in ways that users of Li’s formula hadn’t expected. The cracks became full-fledged canyons in 2008—when ruptures in the financial system’s foundation swallowed up trillions of dollars and put the survival of the global banking system in serious peril.

A couple of lessons I draw out for climate models:

- Limited data availability can limit measurement of long-term cycles. This is particularly true in climate, where cycles can last hundreds and even thousands of years, but good reliable data on world temperatures is only available for about 30 years, and any data at all for only about 150 years. Interestingly, there is good evidence that many of the symptoms we attribute to man-made global warming are actually part of climate cycles that go back long before man burned fossil fuels in earnest. For example, sea levels have been rising since the last ice age, and glaciers have been retreating since the late 18th century.

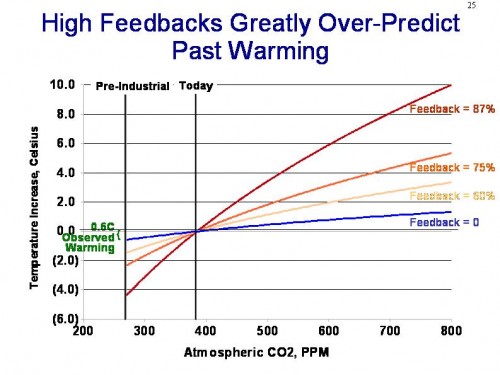

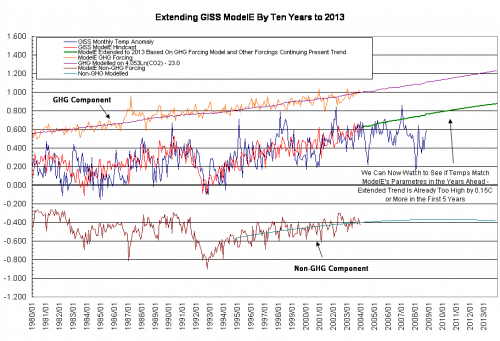

- The fact that models hindcast well has absolutely no predictive power as to whether they will forecast well.

- Trying to paper over deviations between model forecasts and actuals, as climate scientists have been doing for the last 10 years, without revisiting the basic assumptions of the model can be fatal.
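The hindcast point can be illustrated with a deliberately simple sketch. Everything in it is an assumption for illustration: the "true" signal is a single sine wave with a hypothetical period of 400 steps, the modeler sees only the first 60 steps, and the "model" is an ordinary least-squares straight line. It is not a climate model or a financial model, just a picture of fitting a short window of a long cycle.

```python
import math

PERIOD = 400    # assumed length of the long cycle, in arbitrary time steps
OBSERVED = 60   # assumed amount of history available to the modeler


def signal(t):
    """The 'true' system: one slow cycle the modeler does not know about."""
    return math.sin(2 * math.pi * t / PERIOD)


def fit_line(ts, ys):
    """Ordinary least-squares fit of y = slope * t + intercept."""
    n = len(ts)
    mean_t = sum(ts) / n
    mean_y = sum(ys) / n
    slope = (sum((t - mean_t) * (y - mean_y) for t, y in zip(ts, ys))
             / sum((t - mean_t) ** 2 for t in ts))
    return slope, mean_y - slope * mean_t


def rmse(ts, slope, intercept):
    """Root-mean-square error of the fitted line against the true signal."""
    squared = [(slope * t + intercept - signal(t)) ** 2 for t in ts]
    return math.sqrt(sum(squared) / len(squared))


history = list(range(OBSERVED))                  # the data we have
future = list(range(OBSERVED, OBSERVED + 200))   # the period we want to predict

slope, intercept = fit_line(history, [signal(t) for t in history])

hindcast_rmse = rmse(history, slope, intercept)
forecast_rmse = rmse(future, slope, intercept)

print(f"hindcast error over the observed window: {hindcast_rmse:.3f}")
print(f"forecast error over the next 200 steps:  {forecast_rmse:.3f}")
```

Over the observed window the straight line tracks the signal closely, because a short stretch of a long cycle looks like a trend. Extended forward, the line keeps climbing while the cycle turns over, and the forecast error ends up far larger than the hindcast error ever suggested.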

A Final Irony

Do you like irony? In the last couple of months, I have been discovering I like it less than I thought. But here is a bit of irony for you anyway. The first paragraph of Obama’s new budget read like this:

This crisis is neither the result of a normal turn of the business cycle nor an accident of history, we arrived at this point as a result of an era of profound irresponsibility that engulfed both private and public institutions from some of our largest companies’ executive suites to the seats of power in Washington, D.C.

As people start to deconstruct last year’s financial crisis, most of them are coming to the conclusion that the #1 bit of “irresponsibility” was the blind investment of trillions of dollars based solely on the output of correlation-based computer models, and continuing to do so even after cracks appeared in the models.

The irony? Obama’s budget includes nearly $700 billion in new taxes (via a cap-and-trade system) based solely on … correlation-based computer climate models that predict rapidly rising temperatures from CO2. Climate models in which a number of cracks have appeared, but which are being ignored.

Postscript: When I used this comparison the other day, a friend of mine fired back that the Wall Street guys were just MBAs, but the climate guys were “scientists” and thus presumably less likely to err. I responded that I didn’t know if one group or the other was more capable (though I do know that Wall Street employs a hell of a lot of top-notch PhDs). But I did know that the financial consequences for Wall Street traders of having the wrong model were severe, while the impact on climate modelers of being wrong was about zero. So, from an incentives standpoint, I know who I would more likely bet on to try to get it right.