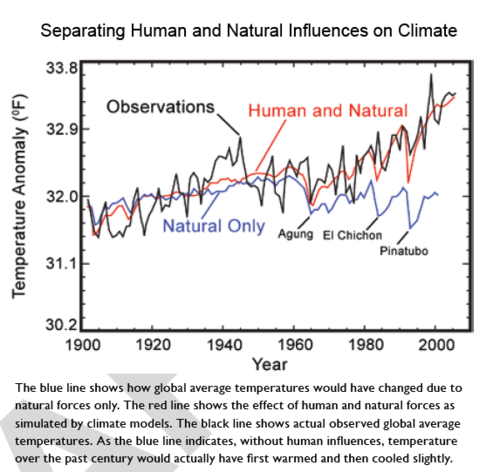

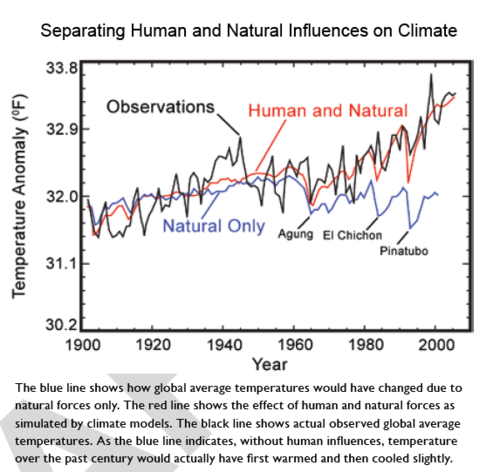

I found the chart below in the "Global Climate Change" chapter of the NOAA/NASA CCSP climate change report (I discuss this report more here). I thought it illustrated some interesting issues:

The Perfect Backcast

What they are doing is what I call "backcasting": taking a predictive model and running it backwards to see how well it performs against historical data. This is a perfectly normal thing to do.

And wow, what a fit. I don’t have the data to run any statistical tests, but just by eye, the red model output line does an amazing job of predicting history. I have done a lot of modeling and forecasting in my life, and I have never, ever backcast a model and gotten results this good. I mean, it is absolutely amazing.

Of course, one can come up with many models that backcast perfectly but have zero predictive power.

A recent item of this ilk maintains that the results of the last game played at home by the NFL’s Washington Redskins (a football team based in the national capital, Washington, D.C.) before the U.S. presidential elections has accurately foretold the winner of the last fifteen of those political contests, going back to 1944. If the Redskins win their last home game before the election, the party that occupies the White House continues to hold it; if the Redskins lose that last home game, the challenging party’s candidate unseats the incumbent president. While we don’t presume there is anything more than a random correlation between these factors, it is the case that the pattern held true even longer than claimed, stretching back over seventeen presidential elections since 1936.
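The Redskins rule is a textbook case of data mining, and the arithmetic is easy to sketch. This assumes each election "match" is an independent 50/50 coin flip (a simplification, not a claim about real elections): one specific indicator matching 17 elections in a row by chance is very unlikely, but if enough candidate indicators are sifted, finding at least one that matches becomes likely. The function names and the numbers of candidate indicators are hypothetical.

```python
# Probability that one arbitrary indicator "predicts" n consecutive elections
# purely by chance, assuming each match is an independent 50/50 event.
def p_single_streak(n_elections: int) -> float:
    return 0.5 ** n_elections


# Probability that at least one of k unrelated candidate indicators matches
# all n elections by chance (same independence assumption).
def p_any_streak(n_elections: int, n_indicators: int) -> float:
    return 1.0 - (1.0 - p_single_streak(n_elections)) ** n_indicators


n = 17  # elections since 1936, per the quoted item
print(f"one indicator: {p_single_streak(n):.2e}")
for k in (1_000, 100_000, 1_000_000):  # hypothetical numbers of indicators sifted
    print(f"{k:>9,} indicators: {p_any_streak(n, k):.3f}")
```

A perfect backcast from a single, pre-specified indicator would be impressive; a perfect backcast found by searching through many candidates is close to guaranteed.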

And in fact, our confidence in the climate models based on their near-perfect backcasting should be tempered by the fact that when the models were first run backwards, they were terrible at predicting history. Only a sustained effort to tweak, adjust, and plug them has produced this tight fit (we will return to the subject of plugging in a minute).
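Tuning until the backcast fits is the classic overfitting problem. A minimal sketch on synthetic data (not climate data, and not how any actual climate model is built): a curve with one free coefficient per historical data point matches history exactly, while a two-parameter straight line fits history less well but forecasts far better. The function names and the synthetic series are illustrative assumptions.

```python
import math
import random


def lagrange_fit(xs, ys):
    """Polynomial through every (x, y) point: one free coefficient per point."""
    def predict(x):
        total = 0.0
        for j, (xj, yj) in enumerate(zip(xs, ys)):
            basis = 1.0
            for m, xm in enumerate(xs):
                if m != j:
                    basis *= (x - xm) / (xj - xm)
            total += yj * basis
        return total
    return predict


def linear_fit(xs, ys):
    """Ordinary least-squares straight line: only two free parameters."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    slope = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sum((x - mx) ** 2 for x in xs)
    intercept = my - slope * mx
    return lambda x: intercept + slope * x


def rmse(model, xs, ys):
    """Root-mean-square error of a model against data."""
    return math.sqrt(sum((model(x) - y) ** 2 for x, y in zip(xs, ys)) / len(xs))


# Synthetic series (hypothetical): a slow trend plus random noise.
random.seed(0)
series = [(t, 0.01 * t + random.gauss(0.0, 0.1)) for t in range(17)]
history, future = series[:12], series[12:]
hx, hy = zip(*history)
fx, fy = zip(*future)

for name, fit in (("12-parameter curve", lagrange_fit), ("straight line", linear_fit)):
    model = fit(hx, hy)
    print(f"{name}: backcast RMSE {rmse(model, hx, hy):.3f}, "
          f"forecast RMSE {rmse(model, fx, fy):.3g}")
```

The flexible curve "wins" the backcast by construction, which is exactly why a perfect backcast says little about forecasting skill.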

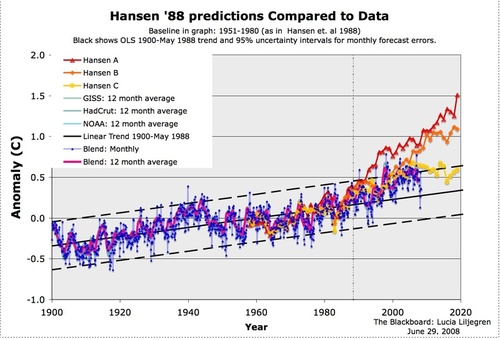

In fact, it is fairly easy to demonstrate that the models are far better at predicting history than at predicting the future. Like the Washington Redskins algorithm, which failed in 2004 after backcasting so well, climate models have done a terrible job of predicting the first 10-20 years of the future. This is the reason that neither this nor any other global warming alarmist report ever shows a chart grading how model forecasts have performed against actual data: their record has been terrible. After all, we have climate model forecast data going back to the late 1980s; surely 20+ years is enough for a fair test of their performance.
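Grading a forecast is not hard once the numbers are in hand. A minimal sketch of such a scorecard, assuming annual forecast and observed anomaly series on a common baseline: it reports the mean bias and compares least-squares trends. The function names and the input numbers are hypothetical placeholders, not Hansen's scenarios or any real temperature record.

```python
def ols_slope(years, values):
    """Least-squares linear trend (units per year)."""
    n = len(years)
    my, mv = sum(years) / n, sum(values) / n
    num = sum((y - my) * (v - mv) for y, v in zip(years, values))
    den = sum((y - my) ** 2 for y in years)
    return num / den


def grade_forecast(years, forecast, observed):
    """Simple scorecard: mean bias plus decadal trends of forecast vs. observations."""
    bias = sum(f - o for f, o in zip(forecast, observed)) / len(years)
    return {
        "mean_bias": bias,
        "forecast_trend_per_decade": 10 * ols_slope(years, forecast),
        "observed_trend_per_decade": 10 * ols_slope(years, observed),
    }


# Placeholder inputs only; substitute real archived forecasts and a real record.
years = list(range(1988, 2008))
forecast = [0.03 * (y - 1988) for y in years]   # hypothetical forecast anomaly, deg C
observed = [0.015 * (y - 1988) for y in years]  # hypothetical observed anomaly, deg C
print(grade_forecast(years, forecast, observed))
```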

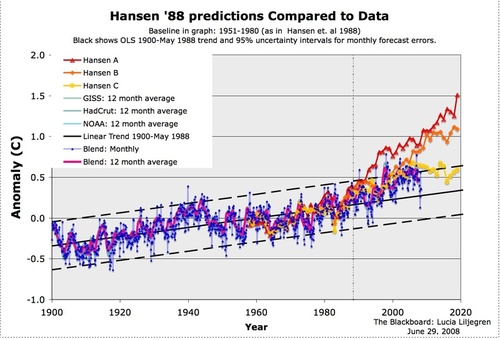

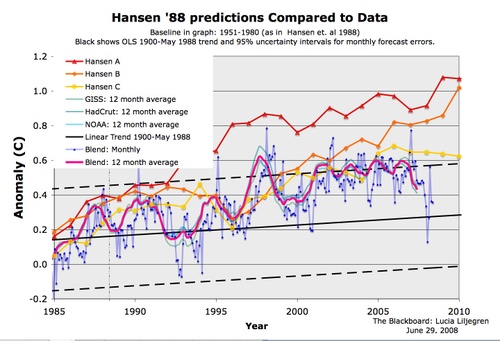

Below are the model forecasts that James Hansen, whose fingerprints are all over this report, presented to Congress in 1988 (in yellow, orange, and red), with a comparison to the actual temperature record (in blue). (source)

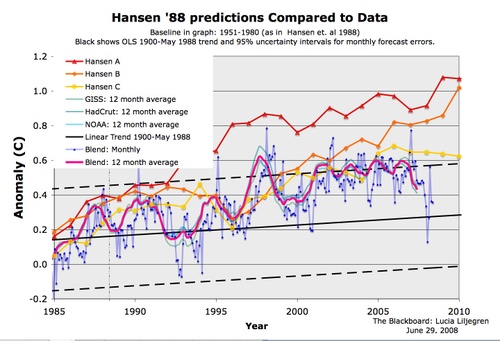

Here is the detail from the right side:

You can see the forecasts began diverging from reality as early as 1985. By the way, don’t be too encouraged by the yellow line appearing fairly close: the Hansen C case in yellow was similar to the IPCC B1 case, which assumes strong international CO2 abatement programs that have not come about. Based on actual CO2 production, the world is tracking between the orange and red lines. However, temperature is nowhere near the predicted values.

So the climate models are perfect at predicting history but begin diverging immediately as we move into the future. That is probably why the IPCC resets its forecasts every 5 years, so it can hit the reset button on this divergence. As an interesting parallel, tree-ring reconstructions of historical temperature have a very similar divergence problem when carried forward into recent decades.

What the Hell happened in 1955?

Looking again at the backcast chart at the top of this article, look at the blue line. This is what the models say world temperature would have been without man-made forcings. The blue line is supposed to represent the climate absent man. But here is the question I have been asking ever since I first started studying global warming, and no one has been able to answer: What changed in the Earth’s climate in 1955? Because, as you can see, climate forecasters are telling us the world would have reversed a strong natural warming trend and started cooling substantially in 1955 if it had not been for anthropogenic effects.

This has always been an issue with man-made global warming theory. Climate scientists admit the world warmed from 1800 through 1955, and that most of this warming was natural. But somehow, this natural force driving warming switched off, conveniently in the exact same year when anthropogenic effects supposedly took hold. A skeptical mind might ask why current warming is not just the same natural trend as warming up to 1955, particularly since no one can say with any confidence why the world warmed up to 1955 and why this warming switched off and reversed after that.

Well, let's see if we can figure it out. The sun, despite constant efforts by alarmists to portray it as climatically meaningless, is a pretty powerful force. Did the sun change in 1955?

Well, it does not look like the sun turned off. In fact, it appears that just the opposite was happening — the sun hit a peak around 1955 and has remained at this elevated level throughout the current supposedly anthropogenic period.

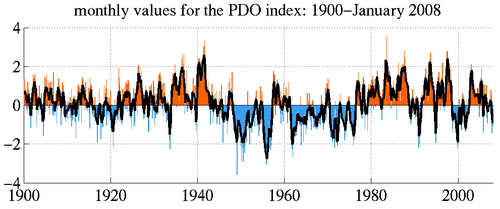

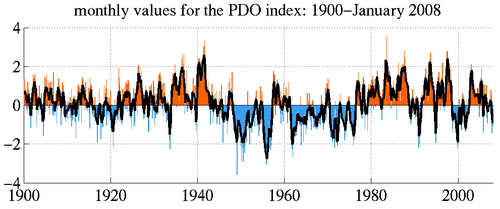

OK, well maybe it was the Pacific Decadal Oscillation? The PDO goes through warm and cold phases, and its shifts can have large effects on temperatures in the Northern Hemisphere.

Hmm, doesn’t seem to be the PDO. The PDO turned downwards 10 years before 1955. And besides, if the line turned down in 1955 due to the PDO, it should have turned back up in the 1980s as the PDO returned to its warm phase.

So what happened in 1955? I can tell you: nothing.

Let me digress for a minute and explain an ugly modeling and forecasting concept called a "plug". It is not unusual, when one is building a model based on certain inputs (say, a financial model built from interest rates and housing starts or whatever), that the net result, while seemingly logical, does not match what one thinks the model should be saying. While few will ever admit it, I have been inside the modeling sausage factory long enough to know that it is common to add plug figures to force a model to reach the answer one thinks it should reach; this is particularly common after backcasting a model.
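To make the idea concrete, here is a minimal sketch of a plug, using made-up numbers and hypothetical function names: the plug is simply the residual between actual history and model output, and adding it back guarantees a perfect backcast.

```python
def fit_plug(model_output, actual):
    """The plug: whatever must be added each period to force the model onto history."""
    return [a - m for m, a in zip(model_output, actual)]


def plugged_backcast(model_output, plug):
    """Model output with the plug added back in."""
    return [m + p for m, p in zip(model_output, plug)]


# Hypothetical numbers: a model that runs hot against history.
actual = [0.0, 0.1, 0.1, 0.2, 0.3]
model_output = [0.0, 0.2, 0.3, 0.5, 0.7]

plug = fit_plug(model_output, actual)
backcast = plugged_backcast(model_output, plug)
print("plug:", [round(p, 2) for p in plug])
print("backcast matches history:",
      all(abs(b - a) < 1e-12 for b, a in zip(backcast, actual)))
```

The fit is perfect by construction, so it says nothing about the model's skill, and the plug's values for future periods have to be chosen by assumption.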

I can’t prove it, any more than this report can prove its statement that man is responsible for most of the world’s warming in the last 50 years. But I am certain in my heart that the blue line in the backcasting chart is a plug. As I mentioned earlier, modelers at first had terrible success matching history with their forecasting models. In particular, because their models showed such high sensitivity of temperature to CO2 (this sensitivity has to be high to get catastrophic forecasts), they greatly over-predicted history.

Here is an example. The graph below shows the relationship between CO2 and temperature for a number of sensitivity levels (the shape of the curve was based on the IPCC formula and the process for creating this graph was described here).

The purple lines represent the IPCC forecasts from the Fourth Assessment Report, and when converted from Celsius to Fahrenheit they approximately match the forecasts on page 28 of this report. The red and orange lines represent more drastic forecasts that have received serious consideration. This graph is itself a simple model, and we can actually backcast with it as well, looking at what these forecasts imply for temperature over the last 100-150 years, during which CO2 has increased from 270 ppm to about 385 ppm.

The forecasts all begin at zero at the pre-industrial concentration of 270 ppm. The green dotted line is the approximate concentration of CO2 today. The green 0.3-0.6C arrows show the reasonable range of CO2-induced warming to date. As one can see, the IPCC forecasts, when cast backwards, grossly overstate past warming. For example, the IPCC high case predicts that we should have seen over 2C of warming due to CO2 since pre-industrial times, not 0.3 or even 0.6C.
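The simple model behind the graph can be sketched in a few lines. This assumes the standard logarithmic form, in which equilibrium warming scales with the number of CO2 doublings, ΔT = S·log2(C/C0), where S is the sensitivity in degrees C per doubling. The sensitivity values are illustrative choices rather than the exact curves in the graph, and the result is equilibrium warming, ignoring any lag from ocean heat uptake.

```python
import math

C0_PPM = 270.0     # pre-industrial CO2 concentration used in the text
C_NOW_PPM = 385.0  # approximate concentration "today" per the text


def equilibrium_warming(c_ppm, sensitivity_per_doubling, c0_ppm=C0_PPM):
    """Equilibrium warming (deg C) assuming dT = S * log2(C / C0)."""
    return sensitivity_per_doubling * math.log2(c_ppm / c0_ppm)


for s in (1.5, 3.0, 4.5):  # illustrative sensitivities, deg C per doubling
    print(f"S = {s} C/doubling -> "
          f"{equilibrium_warming(C_NOW_PPM, s):.2f} C since pre-industrial")
```

Because 385 ppm is only about half a doubling above 270 ppm, the backcast warming is roughly half of S, which is how a high sensitivity translates into more than 2C of implied past warming.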

Now, the modelers worked on this problem. One big tweak was to assign an improbably high cooling effect to sulfate aerosols. Since a lot of these aerosols were produced in the late 20th century, this brought their backcasts closer to actuals. (I say improbably because aerosols are short-lived and cover a very limited area of the globe. If they cover, say, only 10% of the globe, then their cooling effect must be 1C within their area of effect to produce even a small 0.1C global average effect.)
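The area weighting in that parenthetical is simple arithmetic. If an effect is confined to a fraction f of the globe and is zero elsewhere, the global average is f times the local effect, so the local effect must be the global figure divided by f. A one-line sketch, with a hypothetical function name:

```python
def required_local_effect(global_effect_c: float, area_fraction: float) -> float:
    """Local effect needed so the area-weighted global average equals global_effect_c.

    Assumes the effect is uniform inside the covered area and zero elsewhere.
    """
    return global_effect_c / area_fraction


print(required_local_effect(0.1, 0.10))  # the example in the text -> 1.0
```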

Even after these tweaks, the backcasts were still coming out too high. So, to make the models work, they asked themselves: what would global temperatures have had to do without CO2 for our models to match history? The answer is that if the world had naturally cooled in the latter half of the 20th century, that cooling could offset the models' over-prediction of temperatures and reproduce the historic result. So that is what they did. Instead of starting with natural forcings we understand and then trying to explain the rest (one, but only one, piece of which would be CO2), modelers start with the assumption that CO2 is driving temperatures at high sensitivities, and natural forcings are whatever they need to be to make the backcasts match history.
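The backsolving described above can be caricatured in a few lines. This is a minimal sketch, assuming the same logarithmic CO2 response used for the sensitivity graph and treating "natural" as whatever residual remains after the anthropogenic terms; it ignores lags and is not how any particular climate model is actually tuned. The observed-warming value and the function name are hypothetical placeholders.

```python
import math


def implied_natural_term(observed_warming_c, sensitivity_per_doubling,
                         c_ppm=385.0, c0_ppm=270.0, other_anthropogenic_c=0.0):
    """Natural contribution that must be assumed for the backcast to equal observations.

    Modeled CO2 warming uses dT = S * log2(C / C0). Pass a negative
    other_anthropogenic_c for a cooling term such as aerosols. Whatever
    remains unexplained is assigned to "natural" forcing.
    """
    co2_warming = sensitivity_per_doubling * math.log2(c_ppm / c0_ppm)
    return observed_warming_c - co2_warming - other_anthropogenic_c


OBSERVED = 0.6  # hypothetical placeholder for observed warming, deg C
for s in (1.5, 3.0, 4.5):  # illustrative sensitivities, deg C per doubling
    print(f"S = {s}: implied natural term {implied_natural_term(OBSERVED, s):+.2f} C")
```

The higher the assumed sensitivity, the more natural cooling the residual has to supply.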

By the way, if you object to this portrayal (and I will admit I was not in the room to confirm that this is what the modelers were doing), you can refute it very simply. Just tell me what substantial natural driver of climate, larger in impact than the sun or the PDO, reversed itself in 1955.

A Final Irony

I could go on all day making observations on this chart, but I would be surprised if many readers have slogged this far. So I will end with one irony. The climate modelers are all patting themselves on the back because their backcasts match history so well. But much of this historical temperature record is fraught with errors. Just as one example, measured temperatures went through several large up and down shifts in the 1940s and 1950s solely because ships were switching how they took sea surface temperatures (engine inlet sampling tends to yield higher temperatures than bucket sampling). Additionally, most surface temperature readings are taken in cities that have experienced rapid industrial growth, increasing urban heat biases in the measurements. In effect, they have plugged and tweaked their way to the wrong target numbers! Since GISS and other measurement bodies are constantly revising past temperature numbers with new correction algorithms, it will be interesting to see if the climate models magically revise themselves and backcast perfectly to the new numbers as well.
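A toy illustration of how such a shift produces spurious jumps: hold the true sea surface temperature constant and vary only the share of ships using engine-inlet sampling. The bias value, the sampling shares, and the function name are hypothetical, chosen only to show the mechanism, not to reproduce the actual 1940s and 1950s record.

```python
def fleet_mean_reading(true_sst_c, inlet_fraction, inlet_bias_c):
    """Average reported SST when a fraction of ships use engine-inlet sampling.

    Simplifying assumptions: bucket readings are unbiased, and inlet
    readings run inlet_bias_c warmer than the true temperature.
    """
    return true_sst_c + inlet_fraction * inlet_bias_c


TRUE_SST = 15.0   # hypothetical, held constant so any change is pure artifact
INLET_BIAS = 0.3  # hypothetical warm bias of inlet vs. bucket readings, deg C
for fraction in (0.2, 0.8, 0.4):  # hypothetical shifts in the fleet's sampling mix
    reported = fleet_mean_reading(TRUE_SST, fraction, INLET_BIAS)
    print(f"inlet share {fraction:.0%}: reported {reported:.2f} C")
```

The reported average moves up and then down even though the true temperature never changes.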