This is really some crazy-bad science: a new study by Welch et al. on Asian rice yields purports to show that warmer weather will reduce them. That is an odd result on its face, given that rice yields have been increasing as the world has warmed over the last 50 years.

Now, it is possible that temperature-related drops in yields have been offset by even larger yield gains from other factors, but the finding certainly triggers one's suspicion-meter, especially since the press release on the study says that yields have already been cut 10-20% in some areas, which flies in the face of broader yield data.

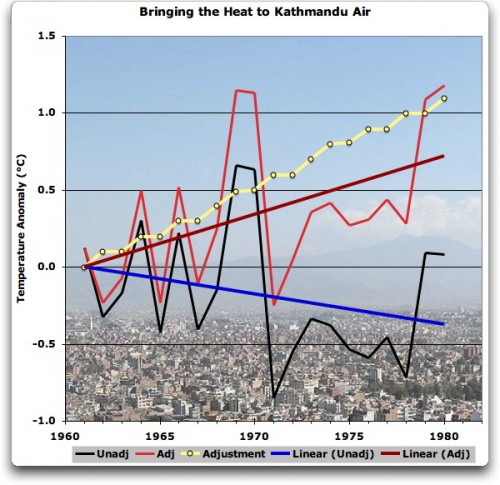

Willis Eschenbach dove into it and found this amazing approach. That it passed muster in peer review is just further evidence of how asymmetrical peer review is in climate science (i.e., if you have the "right" findings, all kinds of slop will pass):

> First, it covers a very short time span. The longest farm yield datasets used are only six years long (1994-99). Almost a fifth of the datasets are three years or less, and the Chinese data (6% of the total data) only cover two years (1998-1999)….

> But whichever dataset they used, they are comparing a two year series of yields against a twenty-six year trend. I'm sorry, but I don't care what the results of that comparison might be. There is no way to compare a two-year dataset with anything but the temperature records from that area for those two years. This is especially true given the known problems with the ground-station data. And it is doubly true when one of the two years (1998) is a year with a large El Niño.

In fact, he goes on to point out that during the same two years in which the Chinese data show yields falling (I still can't get over extrapolating from a two-year farm-yield trend), temperatures in China behaved very differently from what their long-term trends would predict:

> For example, they give the trend for maximum temps in the winter (DecJanFeb) for the particular location in China (29.5N, 119.47E) as being 0.06°C per year, and the trend for spring (MarAprMay) as being 0.05°C per year (I get 0.05°C/yr and 0.04°C/yr respectively, fairly close).

> But from 1998 to 1999, the actual DJF change was +2.0°C, and the MAM change was minus 1.0°C (CRU TS Max Temperature dataset). As a result, they are comparing the Chinese results to a theoretical trend which has absolutely no relationship to what actually occurred on the ground.
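To put those numbers side by side, here is a back-of-the-envelope Python sketch. It uses only the figures quoted above and assumes the long-term trend is a straight line, so the change it implies between two consecutive years is simply one year's worth of trend. The names (`TREND_PER_YEAR`, `OBSERVED_CHANGE`, `compare`) are made up for illustration.

```python
# Figures quoted above for the Chinese site (29.5N, 119.47E), in degrees C.
# Assumption: the long-term trend is treated as a straight line.
TREND_PER_YEAR = {"DJF": 0.06, "MAM": 0.05}   # trend per year given by Welch et al.
OBSERVED_CHANGE = {"DJF": 2.0, "MAM": -1.0}   # actual 1998 -> 1999 change (CRU TS Tmax)


def trend_implied_change(trend_per_year: float, years: int = 1) -> float:
    """Change a linear trend predicts over the given number of years."""
    return trend_per_year * years


def compare(season: str) -> tuple[float, float, float]:
    """Return (trend-implied change, observed change, observed minus implied)."""
    expected = trend_implied_change(TREND_PER_YEAR[season])
    observed = OBSERVED_CHANGE[season]
    return expected, observed, observed - expected


if __name__ == "__main__":
    for season in ("DJF", "MAM"):
        expected, observed, gap = compare(season)
        print(f"{season}: trend implies {expected:+.2f} C, "
              f"observed {observed:+.2f} C, difference {gap:+.2f} C")
```

The trend implies a change of a few hundredths of a degree between 1998 and 1999, while the observed swings are roughly 20 to 33 times larger, and in spring the swing goes the opposite direction from the trend.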

Further, though Eschenbach only mentions it in passing, there is likely another large problem with the data. The researchers do not say which temperature station their data come from, but if the methodology of past global warming studies is any guide, the station could be hundreds of miles away from the farms studied.