Cross-Posted from Coyoteblog

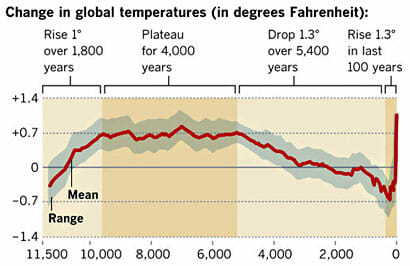

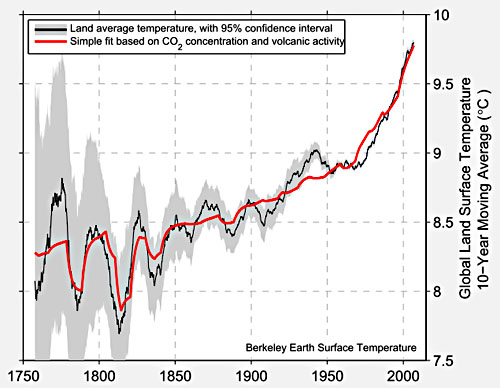

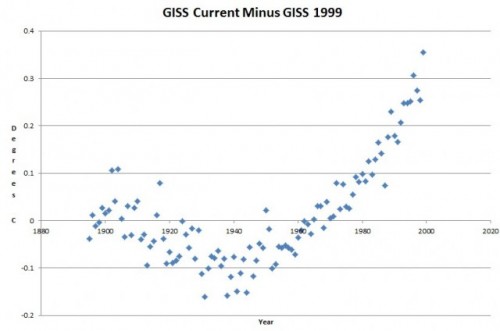

I won’t repeat the analysis; you need to see it here. Here is the chart in question:

My argument is that the smoothing and relatively low sampling frequency in the early data very likely mask variations similar to what we are seeing in the last 100 years; i.e., they greatly exaggerate the smoothness of history (also, the grey range bands are self-evidently garbage, but that is another story).
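To make the masking effect concrete, here is a minimal Python sketch. Everything in it is an assumption for illustration: the series is synthetic (a flat signal with one 50-year excursion), the 200-year window is arbitrary, and `moving_average` is a hypothetical helper, not the method used in the chart.

```python
# Synthetic series for illustration only: 1,000 "years" of a flat signal
# with a single 50-year excursion. These numbers are made up, not proxy data.
signal = [0.0] * 1000
for year in range(500, 550):
    signal[year] = 1.0


def moving_average(values, window):
    """Mean of every run of `window` consecutive values (hypothetical helper)."""
    return [
        sum(values[i:i + window]) / window
        for i in range(len(values) - window + 1)
    ]


# Smooth with an arbitrary 200-year window, standing in for coarse early data.
smoothed = moving_average(signal, 200)

print("largest value in the raw series:     ", max(signal))
print("largest value in the smoothed series:", max(smoothed))
```

In this toy case the excursion reaches 1.0 in the raw series but never exceeds 0.25 once smoothed, because the 50 high years are diluted by 150 flat ones in every window that contains them.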

Drum’s response was that “it was published in Science.” Apparently, this sort of appeal to authority is what passes for data analysis in the climate world.

Well, maybe I did not explain the issue well. So I found a political analysis that may help Kevin Drum see the problem. This is from an actual blog post by Dave Manuel (this seems to be such a common data analysis fallacy that I found an example on the first page of my first Google search). It is an analysis of average GDP growth by president. I don’t know this Dave Manuel guy and can’t comment on the data quality, but let’s assume for a moment that the data is correct. Quoting from his post:

Here are the individual performances of each president since 1948:

1948-1952 (Harry S. Truman, Democrat), +4.82%

1953-1960 (Dwight D. Eisenhower, Republican), +3%

1961-1964 (John F. Kennedy / Lyndon B. Johnson, Democrat), +4.65%

1965-1968 (Lyndon B. Johnson, Democrat), +5.05%

1969-1972 (Richard Nixon, Republican), +3%

1973-1976 (Richard Nixon / Gerald Ford, Republican), +2.6%

1977-1980 (Jimmy Carter, Democrat), +3.25%

1981-1988 (Ronald Reagan, Republican), 3.4%

1989-1992 (George H. W. Bush, Republican), 2.17%

1993-2000 (Bill Clinton, Democrat), 3.88%

2001-2008 (George W. Bush, Republican), +2.09%

2009 (Barack Obama, Democrat), -2.6%

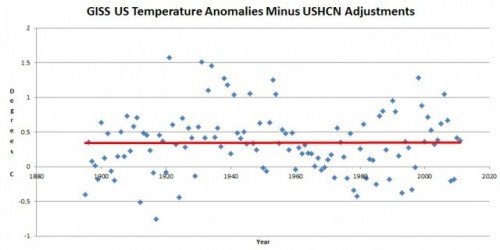

Let’s put this data in a chart:

Look, a hockey stick, right? Obama is the worst, right?

In fact, there is a big problem with this analysis, even if the data is correct. And I bet Kevin Drum can spot it right away, even though it is the exact same problem as on his climate chart.

The problem is that a single year under Obama is compared to four or eight years for other presidents. These earlier presidents may well have had individual down years for the economy; in fact, at least one of Reagan’s early years was almost certainly a down year for GDP. But that kind of volatility is masked because the data points for the other presidents represent much more time, effectively smoothing out the variability.
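The same point can be sketched with a few lines of Python. The growth rates below are hypothetical values chosen for illustration, not real GDP figures, and `block_averages` is an assumed helper name; the only claim is about the arithmetic of averaging.

```python
from statistics import mean

# Hypothetical annual growth rates (percent), for illustration only.
# These are NOT real GDP data for any president.
annual_growth = [4.0, -1.5, 6.0, 3.5, 5.0, -2.0, 4.5, 4.5]


def block_averages(values, block_size):
    """Average consecutive, non-overlapping blocks of `block_size` values."""
    return [
        mean(values[i:i + block_size])
        for i in range(0, len(values), block_size)
    ]


four_year = block_averages(annual_growth, 4)   # two "four-year terms"
eight_year = block_averages(annual_growth, 8)  # one "eight-year term"

print("worst single year: ", min(annual_growth))
print("four-year averages:", four_year)
print("eight-year average:", eight_year)
```

With these made-up numbers, both four-year averages and the eight-year average come out to 3.0, even though two of the individual years are negative. A single year plotted next to those averages would look like an outlier purely because of the mismatch in averaging period.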

Now, this chart has a difference in sampling frequency of 4-8x between the previous presidents and Obama. That made a huge difference here, but it is trivial compared to the million-fold greater sampling frequency of modern temperature data vs. historical data obtained from proxies (such as ice cores and tree rings). And, unlike this chart, the method of sampling is very different across time with temperature: thermometers today are far more reliable and linear measurement devices than trees or ice. In our GDP example, this problem roughly equates to trying to compare GDP under Obama (with all the economic data we collate today) to, say, the economic growth rate under Henry VIII. Or perhaps under Ramses II. If I showed that GDP growth in a single month under Obama was less than the average over 66 years under Ramses II, and tried to draw some conclusion from that, I think someone might challenge my analysis. Unless, of course, it appears in Science; then it must be beyond question.