A lot of skeptics’ websites are riled up about the EPA leadership’s decision not to forward comments by EPA staffer Alan Carlin on the Endangerment issue and global warming, because those comments were not consistent with where the EPA wanted to go on this issue. I reprinted here the key EPA email (which I thought sounded a bit creepy), along with some of the findings by the CEI, which raised this issue.

However, I think skeptics are getting a bit carried away. Let’s try to avoid the exaggeration and hype of which we often accuse global warming alarmists. This decision does not reflect well on the EPA, but let’s make sure we understand what it was and was not:

- This was not a “study” in the sense we would normally use the word. These were comments submitted by an individual on a regulatory decision and/or a draft report. The authors claimed to have had only 4 or 5 days to prepare these comments. In this sense, they are not dissimilar to the types of comments many of us submitted on the recently released climate change synthesis report (comments, by the way, that still have not been released even though the final report is out; in my mind this is a bigger scandal than how Mr. Carlin’s comments were handled). Given this time frame, the comments are quite impressive, but they are nonetheless not a “study.”

- This was not an officially sanctioned study that was somehow suppressed. In other words, I have not seen anything indicating that Mr. Carlin was assigned by the agency to produce a report on anthropogenic global warming. This does not, however, imply that what Mr. Carlin was doing was unauthorized. This is a very normal activity: staffers from various departments and backgrounds submit comments on reports and proposed regulations. He was presumably responding to an internal call for comments due by a certain date.

- I have had a number of folks write me saying that everyone is misunderstanding the key email, that it should be taken at face value and read to mean that Mr. Carlin commented on issues outside the scope of the study or base document he was commenting on. An example might be submitting comments arguing that man is not causing global warming to a study discussing whether warming causes hurricanes. However, his comments certainly seem relevant to the Endangerment question; the background, action, and proposed finding the comments were aimed at are on the EPA website here. Note in particular that the comments in Carlin’s paper were totally relevant and on point to the content of the technical support document linked on that page.

- The fourth email cited by the CEI, saying that Mr. Carlin should cease spending any more time on global warming, is impossible to analyze without more context. There are both sinister and perfectly harmless interpretations of such an email. For example, I could easily imagine an employee assigned to area Y who had a hobbyist interest in area X and loved to comment on area X being asked by his supervisor to go back and do his job in area Y. I have had situations like that in the departments I have run.

What does appear to have happened is that Mr. Carlin responded to a call for comments, submitted comments per the date and process required, and then had the organization refuse to forward those comments because they did not fit the storyline the EPA wanted to put together. This content-based rejection of his submission does appear to violate normal EPA rules and practices and, if not, certainly violates the standards we would want such supposedly science-based regulatory bodies to follow. But let’s not upgrade this category 2 hurricane to category 5 — this was not, as I understand it, an agency suppressing an official agency-initiated study.

I may be a cynical libertarian on this, but this strikes me more as a government issue than a global warming issue. Government bureaucracies love consensus, even when they have to impose it. I don’t think there is a single agency in Washington that has not done something similar, i.e., suppressed internal concerns and dissent once word came down from on high about what the answer was supposed to be on a question they were supposed to be “studying.”** This sucks, but it’s what we get when we build this big, blundering bureaucracy to rule us.

Anyway, Anthony Watts is doing a great job staying on top of this issue. His latest post is here and includes an updated version of Carlin’s comments. Whatever the background, Carlin’s document is well worth a read. I have mirrored the document here.

**Postscript: Here is something I have observed about certain people in both corporate and government bureaucracies. I apologize, but I don’t really have the words for this, and I don’t know the language of psychology. There is a certain type of person who comes to believe, really believe, their boss’s position on an issue. From the outside we often chalk this up to brown-nosing or an “Eddie Haskell” effect, where people fake their beliefs, but I don’t think this is always true. I think there is some sort of human mental defense mechanism by which people tend to actually adopt (not just fake) the beliefs of those in power over them. Certainly some folks resist this, and some issues are too big or fundamental for this to work, but for many folks the mind will reshape itself to the bureaucracy around it. This is why some organizations cannot be fixed and can only be blown up.

Update: The reason skeptics react so strongly to stuff like this is that there are just so many examples:

Over the coming days a curiously revealing event will be taking place in Copenhagen. Top of the agenda at a meeting of the Polar Bear Specialist Group (set up under the International Union for the Conservation of Nature/Species Survival Commission) will be the need to produce a suitably scary report on how polar bears are being threatened with extinction by man-made global warming….

Dr Mitchell Taylor has been researching the status and management of polar bears in Canada and around the Arctic Circle for 30 years, as both an academic and a government employee. More than once since 2006 he has made headlines by insisting that polar bear numbers, far from decreasing, are much higher than they were 30 years ago. Of the 19 different bear populations, almost all are increasing or at optimum levels, only two have for local reasons modestly declined.

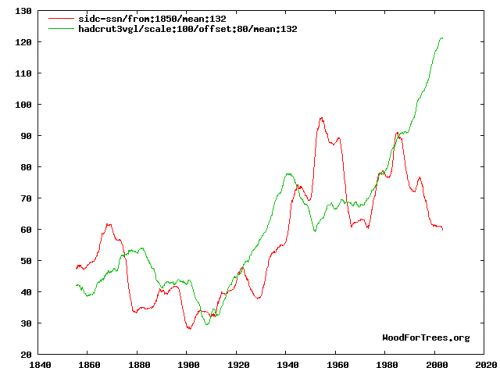

Dr Taylor agrees that the Arctic has been warming over the last 30 years. But he ascribes this not to rising levels of CO2 – as is dictated by the computer models of the UN’s Intergovernmental Panel on Climate Change and believed by his PBSG colleagues – but to currents bringing warm water into the Arctic from the Pacific and the effect of winds blowing in from the Bering Sea….

Dr Taylor had obtained funding to attend this week’s meeting of the PBSG, but this was voted down by its members because of his views on global warming. The chairman, Dr Andy Derocher, a former university pupil of Dr Taylor’s, frankly explained in an email (which I was not sent by Dr Taylor) that his rejection had nothing to do with his undoubted expertise on polar bears: “it was the position you’ve taken on global warming that brought opposition”.

Dr Taylor was told that his views running “counter to human-induced climate change are extremely unhelpful”. His signing of the Manhattan Declaration – a statement by 500 scientists that the causes of climate change are not CO2 but natural, such as changes in the radiation of the sun and ocean currents – was “inconsistent with the position taken by the PBSG”.