For several years, there was an absolute spate of lawsuits charging sudden acceleration of a motor vehicle — you probably saw such a story: Some person claims they hardly touched the accelerator and the car leaped ahead at enormous speed and crashed into the house or the dog or telephone pole or whatever. Many folks have been skeptical that cars were really subject to such positive feedback effects where small taps on the accelerator led to enormous speeds, particularly when almost all the plaintiffs in these cases turned out to be over 70 years old. It seemed that a rational society might consider other causes than unexplained positive feedback, but there was too much money on the line to do so.

Many of you know that I consider questions around positive feedback in the climate system to be the key issue in global warming, the one that separates a nuisance from a catastrophe. Is the Earth’s climate similar to most other complex, long-term stable natural systems in that it is dominated by negative feedback effects that tend to damp perturbations? Or is the Earth’s climate an exception to most other physical processes? Is it in fact dominated by positive feedback effects that, like the sudden acceleration in grandma’s car, apparently rocket the car forward into the house with only the lightest tap of the accelerator?

I don’t really have any new data today on feedback, but I do have a new climate forecast from a leading alarmist that highlights the importance of the feedback question.

Dr. Joseph Romm of Climate Progress wrote the other day that he believes the mean temperature increase in the “consensus view” is around 15F from pre-industrial times to the year 2100. Romm is mainly writing, if I read him right, to say that critics are misreading what the consensus forecast is. Far be it from me to referee among the alarmists (though 15F is substantially higher than the IPCC report “consensus”). So I will take him at his word that a 15F increase with a CO2 concentration of 860ppm is a good mean alarmist forecast for 2100.

I want to deconstruct the implications of this forecast a bit.

For simplicity, we often talk about temperature changes that result from a doubling in CO2 concentrations. We do it this way because the relationship between CO2 concentrations and temperature increases is not linear but logarithmic. Put simply, the temperature change from a CO2 concentration increase from 200 to 300ppm is larger than the temperature change we might expect from an increase from 600 to 700ppm. But the temperature change from 200 to 400ppm is about the same as the temperature change from 400 to 800ppm, because each represents a doubling. This is utterly uncontroversial.
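The logarithmic relationship can be illustrated in a few lines of Python. This is a minimal sketch, assuming the common simplified form ΔT = S × log2(C_end / C_start), where S is the warming per doubling. The helper names and the placeholder value S = 1 are hypothetical, chosen only to compare the four concentration steps in the paragraph above.

```python
import math


def doublings(c_start: float, c_end: float) -> float:
    """Number of CO2 doublings between two concentrations (ppm)."""
    return math.log2(c_end / c_start)


def warming(c_start: float, c_end: float, per_doubling: float) -> float:
    """Warming under the simplified logarithmic model (hypothetical helper):
    delta_T = per_doubling * log2(c_end / c_start)."""
    return per_doubling * doublings(c_start, c_end)


S = 1.0  # placeholder sensitivity: 1 degree per doubling, for comparison only

print(round(warming(200, 300, S), 3))  # 0.585  (200 -> 300 ppm)
print(round(warming(600, 700, S), 3))  # 0.222  (600 -> 700 ppm, a smaller effect)
print(round(warming(200, 400, S), 3))  # 1.0    (one doubling)
print(round(warming(400, 800, S), 3))  # 1.0    (also one doubling)
```

The same 100ppm step buys less warming at higher concentrations, while any doubling buys the same amount.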

If we take the pre-industrial CO2 level as about 270ppm, the current CO2 level as 385ppm, and the 2100 CO2 level as 860ppm, this means that we are about 43% of the way through a first doubling of CO2 since pre-industrial times, and by 2100 we will have seen a full doubling (to 540ppm) plus about 60% of the way to a second doubling (to 1080ppm). For simplicity, then, we can say Romm expects 1.6 doublings of CO2 by 2100 as compared to pre-industrial times.
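The doubling arithmetic can be checked with a short sketch. The 43% and 1.6 figures come from interpolating linearly within each doubling; counting doublings exactly on a logarithmic scale gives somewhat higher numbers. The helper names below are hypothetical, and the concentrations are the ones assumed in the paragraph above.

```python
import math

PRE_INDUSTRIAL = 270.0  # ppm, as assumed in the text
TODAY = 385.0           # ppm
YEAR_2100 = 860.0       # ppm, the forecast concentration


def linear_doublings(c0: float, c: float) -> float:
    """Doublings counted by linear interpolation: whole doublings completed,
    plus the linear fraction of the next one. Assumes c >= c0."""
    completed = 0
    base = c0
    while c >= 2 * base:
        completed += 1
        base *= 2
    return completed + (c - base) / base


def log_doublings(c0: float, c: float) -> float:
    """Exact number of doublings for a logarithmic response."""
    return math.log2(c / c0)


for label, c in (("today", TODAY), ("2100", YEAR_2100)):
    print(label, round(linear_doublings(PRE_INDUSTRIAL, c), 2),
          round(log_doublings(PRE_INDUSTRIAL, c), 2))
# today 0.43 0.51
# 2100 1.59 1.67
```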

So, how much temperature increase should we see with a doubling of CO2? One might expect this to be an incredibly controversial figure at the heart of the whole matter. It is, but only partly. We can break the problem of temperature sensitivity to CO2 levels into two pieces: the expected first order impact, ahead of feedbacks, and then the result after second order effects and feedbacks.

What do we mean by first and second order effects? Well, imagine a golf ball in the bottom of a bowl. If we tap the ball, the first order effect is that it will head off at a constant velocity in the direction we tapped it. The second order effects are the gravity and friction and the shape of the bowl, which will cause the ball to reverse directions, roll back through the middle, etc., causing it to oscillate around until it eventually loses speed to friction and settles to rest approximately back in the middle of the bowl where it started.

It turns out the first order effects of CO2 on world temperatures are relatively uncontroversial. The IPCC estimated that, before feedbacks, a doubling of CO2 would increase global temperatures by about 1.2C (2.2F). Alarmists and skeptics alike generally (but not universally) accept this number or one relatively close to it.

Applied to our increase from 270ppm pre-industrial to 860ppm in 2100, which we said was about 1.6 doublings, this would imply a first order temperature increase of 3.5F (2.2F × 1.6) from pre-industrial times to 2100. (Actually, it would be a tad more than this, as I am interpolating a logarithmic function linearly, but that has no significant impact on our conclusions and might increase the 3.5F estimate by a tenth or two.) Again, recognize that this math and this outcome are fairly uncontroversial.
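A quick sketch of the first order (no-feedback) arithmetic, using only figures from the text: 1.2C per doubling, converted to Fahrenheit, times either the linear 1.6 doublings or the exact logarithmic count. The constant names are illustrative.

```python
import math

C_TO_F = 9.0 / 5.0                # a temperature *difference* of 1C equals 1.8F
NO_FEEDBACK_PER_DOUBLING_C = 1.2  # no-feedback figure quoted in the text

per_doubling_f = NO_FEEDBACK_PER_DOUBLING_C * C_TO_F  # about 2.16F
linear = 1.6 * per_doubling_f                         # linear shortcut from the text
exact = math.log2(860 / 270) * per_doubling_f         # logarithmic count of doublings

print(f"{per_doubling_f:.2f} F per doubling")  # 2.16 F per doubling
print(f"linear estimate: {linear:.1f} F")      # linear estimate: 3.5 F
print(f"log estimate:    {exact:.1f} F")       # log estimate:    3.6 F
```

The difference between the two estimates is small, which is why the linear shortcut is good enough for the argument.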

So the question is, how do we get from 3.5F to 15F? The answer, of course, is the second order effects or feedbacks. And this, just so we are all clear, IS controversial.

A quick primer on feedback. We talk of it as a secondary effect, but in fact it is a recursive process, so there are secondary effects, tertiary effects, and so on.

Let's imagine that there is a positive feedback that, in the secondary effect, increases an initial disturbance by 50%. This means that a force F now becomes F + 50%F. But the feedback also operates on the additional 50%F, such that the force is F + 50%F + 50% × 50%F + ..., and so on in an infinite series. Fortunately, as long as the feedback fraction is less than 100% in magnitude, this series converges, and the total gain reduces to Gain = 1/(1 - f), where f is the feedback percentage in the first iteration. (With f = 50%, the gain is 2: the initial disturbance is doubled.) Note that f can be, and often is, negative, in which case the gain is actually less than 1. This means that the net feedbacks at work damp or reduce the initial input, like the bowl in our example that kept returning our ball to the center.
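The recursion can be made concrete with a small sketch that applies the feedback round by round and compares the running total with the closed form 1/(1 - f). The function names are hypothetical; the initial disturbance F is normalised to 1 so the result is the gain itself.

```python
def gain_by_iteration(f: float, rounds: int = 1000) -> float:
    """Sum 1 + f + f**2 + ... round by round: each round, the feedback
    acts on the increment that was added in the previous round."""
    total = 0.0
    increment = 1.0  # the initial disturbance F, normalised to 1
    for _ in range(rounds):
        total += increment
        increment *= f
    return total


def gain_closed_form(f: float) -> float:
    """Closed form 1 / (1 - f); the series only converges when -1 < f < 1."""
    if not -1.0 < f < 1.0:
        raise ValueError("series diverges unless -1 < f < 1")
    return 1.0 / (1.0 - f)


for f in (0.5, -0.5, 0.77):
    # Both columns agree: positive f amplifies, negative f damps.
    print(f, round(gain_by_iteration(f), 4), round(gain_closed_form(f), 4))
```

For f = 0.5 both give 2.0, for f = -0.5 both give about 0.667, and for f = 0.77 both give about 4.35.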

Well, we don’t actually know the feedback fraction Romm is assuming, but we can derive it. We know his gain must be about 4.3; in other words, he is saying that an initial impact of CO2 of 3.5F is multiplied 4.3x to a final net impact of 15F. Solving Gain = 1/(1 - f) for f gives f = 1 - 1/Gain, so if the gain is 4.3, the feedback fraction f must be about 77%.
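Backing out the implied feedback fraction takes two lines of arithmetic. This sketch uses only the text's figures (3.5F before feedbacks, 15F forecast) and inverts Gain = 1/(1 - f); the variable names are illustrative.

```python
FIRST_ORDER_F = 3.5  # no-feedback warming to 2100 from the text, in F
FORECAST_F = 15.0    # the forecast warming to 2100, in F

gain = FORECAST_F / FIRST_ORDER_F     # about 4.29
feedback_fraction = 1.0 - 1.0 / gain  # invert Gain = 1 / (1 - f)

print(f"gain = {gain:.2f}")                          # gain = 4.29
print(f"feedback fraction = {feedback_fraction:.1%}")  # feedback fraction = 76.7%
```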

Does this make any sense? My contention is that it does not. A 77% first order feedback for a complex system is extraordinarily high — not unprecedented, because nuclear fission is higher — but high enough that it defies nearly every intuition I have about dynamic systems. On this assumption rests literally the whole debate. It is simply amazing to me how little good work has been done on this question. The government is paying people millions of dollars to find out if global warming increases acne or hurts the sex life of toads, while this key question goes unanswered. (Here is Roy Spencer discussing why he thinks feedbacks have been overestimated to date, and a bit on feedback from Richard Lindzen).

But for those of you looking to get some sense of whether a 15F forecast makes sense, here are a couple of reality checks.

First, we have already experienced about 0.43 of a doubling of CO2 from pre-industrial times to today. The same relationships and feedbacks and sensitivities that are forecast forward have to exist backwards as well. A 15F forecast over 1.6 doublings works out to roughly 9.4F per doubling, which implies that we should have seen at least 4F of this increase by today (0.43 × 9.4F). In fact, we have seen, at most, just 1F (and to attribute all of that to CO2, rather than, say, partially to the strong late 20th century solar cycle, is dangerous indeed). But even assuming all of the last century’s 1F temperature increase is due to CO2, we are way, way short of the 4F we might expect. Sure, there are issues with time delays and the possibility of some aerosol cooling to offset some of the warming, but none of these can even come close to closing a gap between 1F and 4F. So, for a 15F temperature increase to be a correct forecast, we have to believe that nature and climate will operate fundamentally differently than they have over the last 100 years.
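The hindcast check can be sketched the same way. This assumes the simplest possible model, a constant warming per doubling with no time lag and no aerosol offset, and uses the text's figures; the names are illustrative.

```python
DOUBLINGS_TO_2100 = 1.6   # pre-industrial to 2100, from the text
DOUBLINGS_TO_DATE = 0.43  # pre-industrial to today, from the text
FORECAST_F = 15.0         # forecast warming by 2100, in F
OBSERVED_MAX_F = 1.0      # the text's upper bound on warming observed so far, in F

# Assumption: constant warming per doubling, no lag, no aerosol offset.
per_doubling_f = FORECAST_F / DOUBLINGS_TO_2100          # about 9.4F per doubling
expected_to_date_f = per_doubling_f * DOUBLINGS_TO_DATE  # about 4.0F

print(f"implied warming per doubling: {per_doubling_f:.1f} F")
print(f"expected by today: {expected_to_date_f:.1f} F, "
      f"observed at most {OBSERVED_MAX_F:.1f} F")
```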

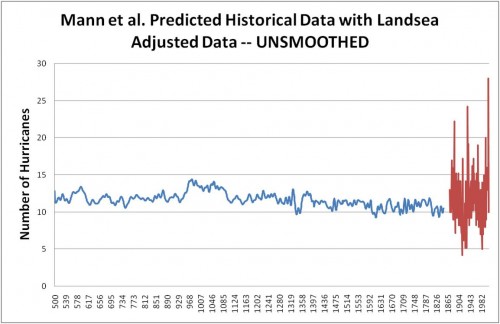

Second, alarmists have been peddling a second analysis, called the Mann hockey stick, which is so contradictory to these assumptions of strong positive feedback that it is amazing to me no one has called them on the carpet for it. In brief, Mann, in an effort to show that 20th century temperature increases are unprecedented and therefore more likely to be due to mankind, created an analysis quoted all over the place (particularly by Al Gore) that says that from the year 1000 to about 1850, the Earth’s temperature was incredibly, unbelievably stable. He shows that the Earth’s temperature trend in this roughly 850-year period never moves more than a few tenths of a degree C. Even during the Maunder Minimum, when we know the sun was unusually quiet, global temperatures were dead stable.

This is simply IMPOSSIBLE in a high-feedback environment. There is no way a system dominated by the very high levels of positive feedback assumed in Romm’s and other forecasts could possibly be so rock-stable in the face of large changes in external forcings (such as the output of the sun during the Maunder Minimum). Every time Mann and others try to sell the hockey stick, they are putting a dagger in the heart of high-positive-feedback driven forecasts (a category that probably includes every single forecast you have seen in the media).

For a more complete explanation of these feedback issues, see my video here.