I have always been suspicious of climate models, in part because I spent some time in college trying to model chaotic dynamic systems, and in part because I have a substantial amount of experience with financial modeling. There are a number of common traps one can fall into when modeling any system, and it appears to me that climate modelers are falling into most of them.

So a while back (before I even created this site) I was suspicious of this chart from the IPCC. In this chart, the red is the “backcasting” of temperature history using climate models, the black line is the highly smoothed actuals, while the blue is a guess from the models as to what temperatures would have looked like without manmade forcings, particularly CO2.

As I wrote at the time:

> I cannot prove this, but I am willing to make a bet based on my long, long history of modeling (computers, not fashion). My guess is that the blue band, representing climate without man-made effects, was not based on any real science but was instead a plug. In other words, they took their models and actual temperatures and then said “what would the climate without man have to look like for our models to be correct.” There are at least four reasons I strongly suspect this to be true:
>
> - Every computer modeler in history has tried this trick to make their models of the future seem more credible. I don’t think the climate guys are immune.
>
> - There is no way their models, with our current state of knowledge about the climate, match reality that well.
>
> - The first time they ran their models vs. history, they did not match at all. This current close match is the result of a bunch of tweaking that has little impact on the model’s predictive ability but forces it to match history better. For example, early runs had the forecast run right up from the 1940 peak to temperatures way above what we see today.
>
> - The blue line totally ignores any of our other understandings about the changing climate, including the changing intensity of the sun. It is conveniently exactly what is necessary to make the pink line match history. In fact, against all evidence, note the blue band falls over the century. This is because the models were pushing the temperature up faster than we have seen it rise historically, so the modelers needed a negative plug to make the numbers look nice.

As you can see, the blue band, supposedly sans mankind, shows a steadily declining temperature. This never made much sense to me. Almost however you measure it, solar activity over the last half of the century was stronger than in the first half, yet the models show natural forcings moving in exactly the opposite direction from what we might expect from this chart of solar activity as measured by sunspots (red is smoothed sunspot numbers, green is Hadley CRUT3 temperature).

By the way, there is a bit of a story behind this chart. It was actually submitted to this site (without the PDO bands) by a commenter of the more alarmist persuasion, to try to debunk the link between temperature and the sun (silly rabbit: the earth’s temperature is not driven by the sun, but by parts-per-million changes in atmospheric gas concentrations!). While the sun is still not the only factor driving the mercilessly complex climate, clearly solar activity in red was higher in the latter half of the century when temperatures in green were rising. That relationship is at least as tight as the one between CO2 and the same warming.

Anyway, why does any of this matter? Skeptics have argued for quite some time that climate models assume too high a sensitivity of temperature to CO2. In other words, while most of us agree that CO2 increases can affect temperatures somewhat, the models assume temperature to be very sensitive to CO2, in large part because they assume that the world’s climate is dominated by positive feedback.

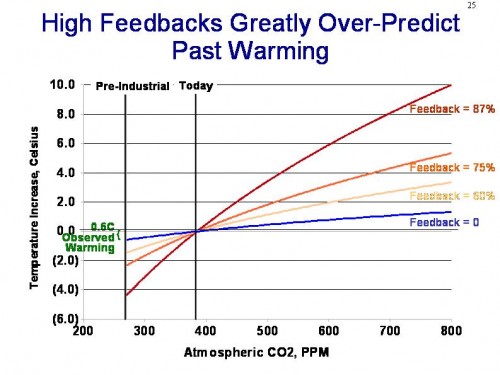

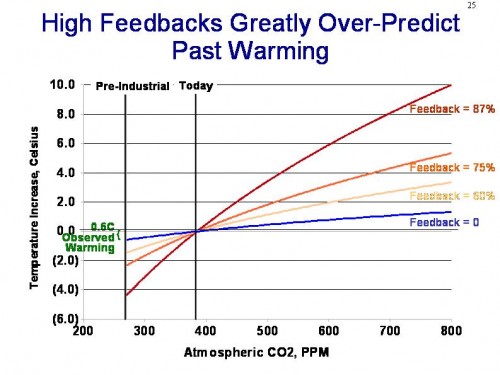

One way to demonstrate that these models may be exaggerated is to plot their predictions backwards. A relationship between CO2 and temperature that exists in the future should hold in the past, adjusting for time delays (in fact, the relationship should be more sensitive in the past, since sensitivity follows a logarithmic, diminishing-return curve). But projecting the modelled sensitivities backwards (with a 15-year lag) results in ridiculously high predicted historic temperature increases that we simply have never seen. I discuss this in some depth in my 10-minute video here, but the key chart is this one:

You can see the video to get a full explanation, but in short, models that include high net positive climate feedbacks have to produce historical warming numbers that far exceed measured results. Even if we assign every bit of 20th century warming to man-made causes, this still only implies 1C of warming over the next century.
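To make the backcasting argument concrete, here is a minimal Python sketch of the common logarithmic form of CO2 sensitivity, assuming equilibrium warming scales as S × log2(C/C0), where S is the warming per doubling of CO2. The 280 ppm baseline and 385 ppm present-day values are illustrative assumptions, not figures from this post; the 15-year lag is ignored, and the helper names are hypothetical. It shows two things: a higher assumed S implies proportionally more warming to date, and each extra ppm buys less warming at higher concentrations, which is why the relationship should look more sensitive in the past, not less.

```python
import math


def warming_from_co2(c_ppm: float, c0_ppm: float, sensitivity_per_doubling: float) -> float:
    """Equilibrium warming (C) under an assumed log-CO2 relationship.

    Assumes dT = S * log2(C / C0), where S is the warming per doubling of CO2.
    """
    return sensitivity_per_doubling * math.log2(c_ppm / c0_ppm)


def marginal_warming_per_ppm(c_ppm: float, sensitivity_per_doubling: float) -> float:
    """Derivative of the log relationship: warming per extra ppm at concentration C."""
    return sensitivity_per_doubling / (c_ppm * math.log(2))


# Illustrative inputs only (assumptions, not data from the post)
C0 = 280.0     # assumed pre-industrial baseline, ppm
C_NOW = 385.0  # assumed present-day concentration, ppm

for s in (1.0, 3.0):
    implied = warming_from_co2(C_NOW, C0, s)
    print(f"S = {s:.1f} C/doubling -> implied equilibrium warming to date: {implied:.2f} C")

# Diminishing returns: the same 1 ppm step buys less warming at higher concentrations
print(marginal_warming_per_ppm(300.0, 3.0) > marginal_warming_per_ppm(500.0, 3.0))
```

Tripling the assumed sensitivity triples the warming the model must have produced already, which is the gap between high-feedback models and the measured record that the chart above is meant to show.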

So the only way to fix this is with what modelers call a plug. Create some new variable, in this case “the hypothetical temperature changes without manmade CO2,” and plug it in. By making this number very negative in the past, but flat to positive in the future, one can have a forecast that rises slowly in the past but rapidly in the future.
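Mechanically, a plug is just the residual between what was observed and what the rest of the model produces. The Python sketch below is hypothetical: the anomaly numbers are made up (not GISS or IPCC data) and describe a model that runs hot. The plug it computes comes out increasingly negative, and by construction the model plus the plug reproduces history exactly, which is why a perfect hindcast on its own says little about predictive skill.

```python
from typing import List


def compute_plug(actual: List[float], modelled: List[float]) -> List[float]:
    """Residual 'plug' chosen so that modelled + plug equals actual, by construction."""
    if len(actual) != len(modelled):
        raise ValueError("series must be the same length")
    return [a - m for a, m in zip(actual, modelled)]


# Hypothetical temperature anomalies (C), purely illustrative
actual = [0.00, 0.10, 0.15, 0.20, 0.35]
modelled = [0.00, 0.20, 0.40, 0.60, 0.80]  # a high-sensitivity model running hot

plug = compute_plug(actual, modelled)
hindcast = [m + p for m, p in zip(modelled, plug)]

print([round(p, 2) for p in plug])  # increasingly negative, as in the blue band
print(all(abs(h - a) < 1e-12 for h, a in zip(hindcast, actual)))
```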

Now, I can’t prove that this is what was done. In fact, I am perfectly willing to believe that modelers can spin a plausible story, with enough jargon to put off most laymen, about how they created this “non-man” line and why it has been decreasing over the last half of the century. But I have a number of reasons to disbelieve any such posturing:

- The last IPCC report spent about a thousand pages on developing the “with CO2” forecasts. It spent about half a page discussing the “without CO2” case. There is almost zero scientific discussion of how this forecast is created, or what the key elements are that drive it.

- The IPCC report freely admits its understanding of cooling factors is “low.”

- The resulting forecast is WAY too good. We will see this again in a moment. But with such a chaotic system, your first reaction to anyone who shows you a back-cast that overlays history almost exactly should be “bullshit.” It’s not possible, except with tuning and plugs.

- The sun was almost undeniably stronger in the second half of the 20th century than the first half. So what is the countervailing factor that overcomes both the sun and CO2?

The IPCC does not really say what is making the blue line go down; it just goes down (because, as we can see now, it has to in order to make their hypothesis work). Today, the main answer to the question of what might be offsetting warming is “aerosols,” particularly sulfur and carbon compounds that are man-made pollutants (true pollutants) from burning fossil fuels. The hypothesis is that these aerosols reflect sunlight back to space and cool the earth (by the way, the blue line above in the IPCC report explicitly includes only non-anthropogenic effects, so at the time it went down due to natural effects; the man-made aerosol thing is a newer straw to grasp).

But black carbon and aerosols have some properties that create problems for this argument once you dig into it. First, there are situations where they are as likely to warm as to cool. For example, one reason the Arctic has been melting faster in the summer of late is likely black carbon from Chinese coal plants that lands on the ice and warms it faster.

The other issue with aerosols is that they disperse quickly. CO2 mixes fairly evenly worldwide and remains in the atmosphere for years. Many combustion aerosols only remain in the air for days, so they tend to be concentrated regionally. Perhaps 10-20% of the earth’s surface might at any one time have a decent concentration of man-made aerosols. But for that to drive, say, a half-degree cooling effect that offsets CO2 warming, the cooling in these aerosol-affected areas would have to be 2.5-5.0C in magnitude. If this were the case, we would see those colored global warming maps show cooling in industrial, aerosol-rich areas and warming in the rest of the world, but we just don’t see that. In fact, the vast, vast majority of man-made aerosols are found in the northern hemisphere, yet it is the northern hemisphere that is warming much faster than the southern hemisphere. If aerosols were really offsetting half or more of the warming, we should see the opposite, with a toasty south and a cool north.
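The 2.5-5.0C figure is an area-weighted average run in reverse. A minimal Python sketch, assuming the aerosol cooling is confined entirely to the covered fraction of the surface and the rest of the world gets none (the function name is hypothetical):

```python
def required_regional_cooling(global_offset_c: float, covered_fraction: float) -> float:
    """Local cooling needed in aerosol-covered areas to produce a given global-mean offset.

    Assumes cooling occurs only over the covered fraction of the surface, so the
    area-weighted global mean is local_cooling * covered_fraction.
    """
    if not 0.0 < covered_fraction <= 1.0:
        raise ValueError("covered_fraction must be in (0, 1]")
    return global_offset_c / covered_fraction


for frac in (0.20, 0.10):
    local = required_regional_cooling(0.5, frac)
    print(f"{frac:.0%} coverage -> {local:.1f} C of local cooling")
```

With a 0.5C global offset, 20% coverage requires 2.5C of local cooling and 10% coverage requires 5.0C, matching the range above.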

All of this is a long, long intro to a guest post on WUWT by Bill Illis. He digs into one of the major climate models, GISS model E, and looks at the back-casts from this model. What he finds mirrors a lot of what we discussed above:

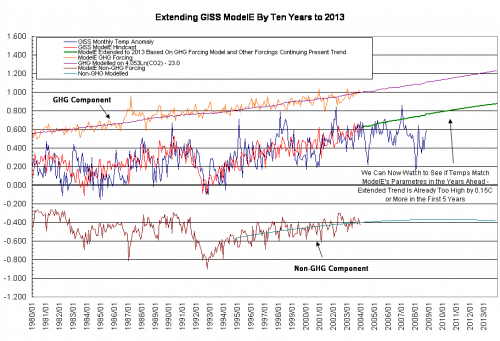

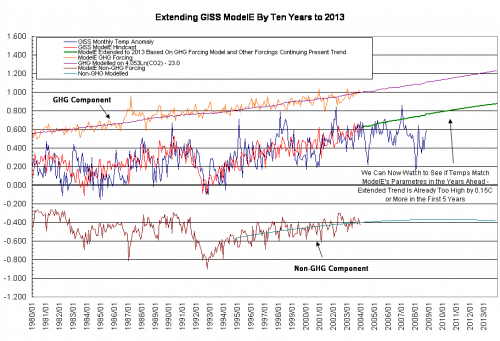

Blue is the GISS actual temperature measurement. Red is the model’s hind-cast of temperatures. You can see that they are remarkably, amazingly, staggeringly close. There are chaotic systems we have been modelling for hundreds of years (e.g. the economy) where we have never approached the accuracy this relative infant of a science seems to achieve.

That red forecast in the middle is made up of a GHG component, shown in orange, plus a negative “everything else” component, shown in brown. Is this starting to seem familiar? Does the brown line smell suspiciously to anyone else like a “plug”? Here are some random thoughts inspired by this chart:

- As with any surface temperature measurement system, the GISS system is full of errors, biases, and gaps. Some of these its proprietors would acknowledge, and others have been pointed out by outsiders. Nevertheless, the GISS metric is likely to have an error of at least a couple tenths of a degree. That means the climate model here is perfectly fitting itself to data that likely isn’t even correct. It fits the GISS temperature number more closely than the GISS number likely fits the actual world temperature anomaly, if such a thing could be measured directly. Since the Hadley Center and the satellite guys at UAH and RSS get different temperature histories for the last 30-100 years, it is interesting that the GISS model exactly matches the GISS measurement but not these others. Does that make anyone suspicious? When GISS makes yet another correction to its historical data, will the model move with it?

- As mentioned before, the total time spent over the last 10 years trying to carefully assess the forcings from other natural and man-made effects, and how they vary year to year, is minuscule compared to the time spent looking at CO2. I don’t think we have enough knowledge to draw the CO2 line on this chart, but we CERTAINLY don’t have the knowledge to draw the “all other” line (with monthly resolution, no less!).

- Looking back over history, it appears the model is never off by more than 0.4C in any month, and never goes more than about 10 months before re-intersecting the “actual” line. Does it bother anyone else that this level of precision is several times higher than the model achieves when run forward? Almost immediately, the model is more than 0.4C off, and it goes years without intersecting reality.
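Both fit statistics in that last point, the maximum monthly error and how long the model goes without crossing the actual line, are easy to compute for any pair of monthly series. A minimal Python sketch with a hypothetical helper name and made-up example numbers; applied to a hindcast and then to a forward run against later observations, the same function would quantify the contrast described above.

```python
from typing import List, Tuple


def fit_statistics(actual: List[float], model: List[float]) -> Tuple[float, int]:
    """Return (max absolute error, longest run of months on one side of actual).

    A run counts consecutive months in which the model stays strictly above or
    strictly below the actual series; an exact match or a sign change ends it.
    """
    if len(actual) != len(model) or not actual:
        raise ValueError("need two non-empty series of equal length")
    diffs = [m - a for a, m in zip(actual, model)]
    max_abs_err = max(abs(d) for d in diffs)

    longest = run = 0
    prev_sign = 0
    for d in diffs:
        sign = (d > 0) - (d < 0)  # +1 above, -1 below, 0 on the line
        if sign != 0 and sign == prev_sign:
            run += 1  # still on the same side: extend the run
        elif sign != 0:
            run = 1  # crossed the actual line: start a new run
        else:
            run = 0  # exactly on the line: no run in progress
        prev_sign = sign
        longest = max(longest, run)
    return max_abs_err, longest


# Hypothetical monthly anomalies (C), purely illustrative
actual = [0.0, 0.0, 0.0, 0.0, 0.0, 0.0]
model = [0.1, 0.2, -0.1, -0.3, -0.2, 0.05]
print(fit_statistics(actual, model))
```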