Testimony of Roy W. Spencer before the Senate Environment and Public Works Committee on 22 July 2008


I would like to thank Senator Boxer and members of the Committee for allowing me to discuss my experiences as a NASA employee engaged in global warming research, as well as to provide my current views on the state of the science of global warming and climate change.

I have a PhD in Meteorology from the University of Wisconsin-Madison, and have been involved in global warming research for close to twenty years. I have numerous peer reviewed scientific articles dealing with the measurement and interpretation of climate variability and climate change. I am also the U.S. Science Team Leader for the AMSR-E instrument flying on NASA’s Aqua satellite.

1. White House Involvement in the Reporting of Agency Employees’ Work

On the subject of the Administration’s involvement in policy-relevant scientific work performed by government employees in the EPA, NASA, and other agencies, I can provide some perspective based upon my previous experiences as a NASA employee. For example, during the Clinton-Gore Administration I was told what I could and could not say during congressional testimony. Since it was well known that I am skeptical of the view that mankind’s greenhouse gas emissions are mostly responsible for global warming, I assumed that this advice was to help protect Vice President Gore’s agenda on the subject.

This did not particularly bother me, though, since I knew that as an employee of an Executive Branch agency my ultimate boss resided in the White House. To the extent that my work had policy relevance, it seemed entirely appropriate to me that the privilege of working for NASA included a responsibility to abide by direction given by my superiors.

But I eventually tired of the restrictions I had to abide by as a government employee, and in the fall of 2001 I resigned from NASA and accepted my current position as a Principal Research Scientist at the University of Alabama in Huntsville. Despite my resignation from NASA, I continue to serve as Team Leader on the AMSR-E instrument flying on the NASA Aqua satellite, and maintain a good working relationship with other government researchers.

2. Global Warming Science: The Latest Research

Regarding the currently popular theory that mankind is responsible for global warming, I am very pleased to deliver good news from the front lines of climate change research. Our latest research results, which I am about to describe, could have an enormous impact on policy decisions regarding greenhouse gas emissions.

Despite decades of persistent uncertainty over how sensitive the climate system is to increasing concentrations of carbon dioxide from the burning of fossil fuels, we now have new satellite evidence which strongly suggests that the climate system is much less sensitive than is claimed by the U.N.’s Intergovernmental Panel on Climate Change (IPCC).

Another way of saying this is that the real climate system appears to be dominated by “negative feedbacks” rather than the “positive feedbacks” which are displayed by all twenty computerized climate models utilized by the IPCC. (Feedback parameters larger than 3.3 watts per square meter per kelvin (W m⁻² K⁻¹) indicate negative feedback, while feedback parameters smaller than 3.3 indicate positive feedback.)
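To make the threshold concrete, the following is a minimal sketch of how a feedback parameter maps onto equilibrium warming, using the standard relation that warming equals forcing divided by the feedback parameter. The 3.3 threshold is the value quoted above; the forcing of 3.7 W m⁻² for a doubling of carbon dioxide is a commonly used figure that is assumed here, not taken from this testimony, and the function names are hypothetical.

```python
# Illustrative sketch only: relates a feedback parameter to equilibrium warming.
NO_FEEDBACK_PARAM = 3.3  # W m^-2 K^-1, threshold quoted in the testimony
FORCING_2XCO2 = 3.7      # W m^-2, ASSUMED forcing for doubled CO2 (not from the testimony)


def feedback_sign(lam):
    """Classify a feedback parameter relative to the 3.3 W m^-2 K^-1 threshold."""
    if lam > NO_FEEDBACK_PARAM:
        return "negative"
    if lam < NO_FEEDBACK_PARAM:
        return "positive"
    return "neutral"


def equilibrium_warming(lam, forcing=FORCING_2XCO2):
    """Equilibrium temperature change (K) for a sustained forcing (W m^-2)."""
    if lam <= 0:
        raise ValueError("feedback parameter must be positive for a stable climate")
    return forcing / lam


# Hypothetical feedback parameters, chosen only to show the direction of the effect.
for lam in (1.0, 3.3, 6.0):
    print(lam, feedback_sign(lam), round(equilibrium_warming(lam), 2))
```

A larger feedback parameter means more energy is lost to space per degree of warming, so the same forcing produces a smaller temperature change.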

If true, an insensitive climate system would mean that we have little to worry about in the way of manmade global warming and associated climate change. And, as we will see, it would also mean that the warming we have experienced in the last 100 years is mostly natural. Of course, if climate change is mostly natural then it is largely out of our control, and is likely to end — if it has not ended already, since satellite-measured global temperatures have not warmed for at least seven years now.

2.1 Theoretical evidence that climate sensitivity has been overestimated

The support for my claim of low climate sensitivity (net negative feedback) for our climate system is two-fold. First, we have a new research article [1] in press in the Journal of Climate which uses a simple climate model to show that previous estimates of the sensitivity of the climate system from satellite data were biased toward the high side by the neglect of natural cloud variability. It turns out that the failure to account for natural, chaotic cloud variability generated internal to the climate system will always lead to the illusion of a climate system which appears more sensitive than it really is.

Significantly, prior to its acceptance for publication, this paper was reviewed by two leading IPCC climate model experts – Piers Forster and Isaac Held – both of whom agreed that we have raised a legitimate issue. Piers Forster, an IPCC report lead author and a leading expert on the estimation of climate sensitivity, even admitted in his review of our paper that other climate modelers need to be made aware of this important issue.

To be fair, in a follow-up communication Piers Forster stated to me his belief that the net effect of the new understanding on climate sensitivity estimates would likely be small. But as we shall see, the latest evidence now suggests otherwise.

2.2 Observational evidence that climate sensitivity has been overestimated

The second line of evidence in support of an insensitive climate system comes from the satellite data themselves. While our work in-press established the existence of an observational bias in estimates of climate sensitivity, it did not address just how large that bias might be.

But in the last several weeks, we have stumbled upon clear and convincing observational evidence of particularly strong negative feedback (low climate sensitivity) from our latest and best satellite instruments. That evidence includes our development of two new methods for extracting the feedback signal from either observational or climate model data, a goal which has been called the “holy grail” of climate research [2].

The first method separates the true signature of feedback, wherein radiative flux variations are highly correlated to the temperature changes which cause them, from internally-generated radiative forcings, which are uncorrelated to the temperature variations which result from them. It is the latter signal which has been ignored in all previous studies, the neglect of which biases feedback diagnoses in the direction of positive feedback (high climate sensitivity).
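The direction of this bias can be illustrated with a toy energy-balance simulation. The sketch below is not the model from the in-press paper: it assumes a single-layer system in nondimensional units, red-noise forcings, and hypothetical parameter values and function names. It regresses outgoing flux on temperature, once with only non-radiative forcing and once with an added internally generated radiative forcing.

```python
import random


def ar1_series(n, phi, rng):
    """Red-noise (AR(1)) series with unit-variance Gaussian innovations."""
    x, out = 0.0, []
    for _ in range(n):
        x = phi * x + rng.gauss(0.0, 1.0)
        out.append(x)
    return out


def regression_slope(x, y):
    """Ordinary least-squares slope of y regressed on x."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    return sxy / sxx


def diagnosed_feedback(lam, radiative_amp, n=20000, step=0.05, phi=0.9, seed=0):
    """Feedback parameter diagnosed by regression in a toy model (assumed setup).

    lam           -- true feedback parameter specified in the model
    radiative_amp -- amplitude of internally generated radiative forcing
    """
    rng = random.Random(seed)
    nonrad = ar1_series(n, phi, rng)                       # non-radiative forcing
    rad = [radiative_amp * v for v in ar1_series(n, phi, rng)]  # radiative forcing
    temp, temps, fluxes = 0.0, [], []
    for s_t, n_t in zip(nonrad, rad):
        temps.append(temp)
        # Outgoing flux anomaly seen from space: feedback response minus
        # the radiative forcing itself.
        fluxes.append(lam * temp - n_t)
        temp += step * (s_t + n_t - lam * temp)            # forward Euler step
    return regression_slope(temps, fluxes)


true_lam = 3.3
print("non-radiative forcing only:", diagnosed_feedback(true_lam, 0.0))
print("with radiative forcing:    ", diagnosed_feedback(true_lam, 1.0))
```

In this toy setup the regression recovers the specified feedback parameter when only non-radiative forcing is present, and returns a smaller value (an apparently more sensitive system) once the radiative forcing is switched on, because that forcing is positively correlated with the warming it produces.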

Based upon global oceanic climate variations measured by a variety of NASA and NOAA satellites during the period 2000 through 2005, we have found a signature of climate sensitivity so low that it would reduce future global warming projections to below 1 deg. C by the year 2100. As can be seen in Fig. 1, that estimate from satellite data is much less sensitive (a larger diagnosed feedback) than even the least sensitive of the 20 climate models which the IPCC summarizes in its report. It is also consistent with our previously published analysis of feedbacks associated with tropical intraseasonal oscillations [3].

Fig. 1. Frequency distributions of feedback parameters (regression slopes) computed from three-month low-pass filtered time series of temperature (from channel 5 of the AMSU instrument flying on the NOAA-15 satellite) and top-of-atmosphere radiative flux variations for 6 years of global oceanic satellite data measured by the CERES instrument flying on NASA’s Terra satellite; and from a 60 year integration of the NCAR-CCSM3.0 climate model forced by 1% per year CO2 increase. Peaks in the frequency distributions indicate the dominant feedback operating. This NCAR model is the least sensitive (greatest feedback parameter value) of all 20 IPCC models.

A second method for extracting the true feedback signal takes advantage of the fact that during natural climate variability, there are varying levels of internally-generated radiative forcings (which are uncorrelated to temperature), versus non-radiative forcings (which are highly correlated to temperature). If the feedbacks estimated for different periods of time involve different levels of correlation, then the “true” feedback can be estimated by extrapolating those results to 100% correlation. This can be seen in Fig. 2, which shows that even previously published [4] estimates of positive feedback are, in reality, supportive of negative feedback (feedback parameters greater than 3.3 W m⁻² K⁻¹).

Fig. 2. Re-analysis of the satellite-based feedback parameter estimates of Forster and Gregory (2006) showing that they are consistent with negative feedback rather than positive feedback (low climate sensitivity rather than high climate sensitivity).

2.3 Why do climate models produce so much global warming?

The results just presented raise the following question: If the satellite data indicate an insensitive climate system, why do the climate models suggest just the opposite? I believe the answer lies in a misinterpretation of cloud behavior by climate modelers.

The cloud behaviors programmed into climate models (cloud “parameterizations”) are based upon researchers’ interpretation of cause and effect in the real climate system [5]. When cloud variations in the real climate system have been measured, it has been assumed that the cloud changes were the result of certain processes, which are ultimately tied to surface temperature changes. But since other, chaotic, internally generated mechanisms can also be the cause of cloud changes, the neglect of those processes leads to cloud parameterizations which are inherently biased toward high climate sensitivity.

The reason why the bias occurs only in the direction of high climate sensitivity is this: While surface warming could conceivably cause cloud changes which lead to either positive or negative cloud feedback, causation in the opposite direction (cloud changes causing surface warming) can only work in one direction, which then “looks like” positive feedback. For example, decreasing low cloud cover can only produce warming, not cooling, and when that process is observed in the real climate system and assumed to be a feedback, it will always suggest a positive feedback.

2.4 So, what has caused global warming over the last century?

One necessary result of low climate sensitivity is that the radiative forcing from greenhouse gas emissions in the last century is not nearly enough to explain the upward trend of 0.7 deg. C in the last 100 years. This raises the question of whether there are natural processes at work which have caused most of that warming.

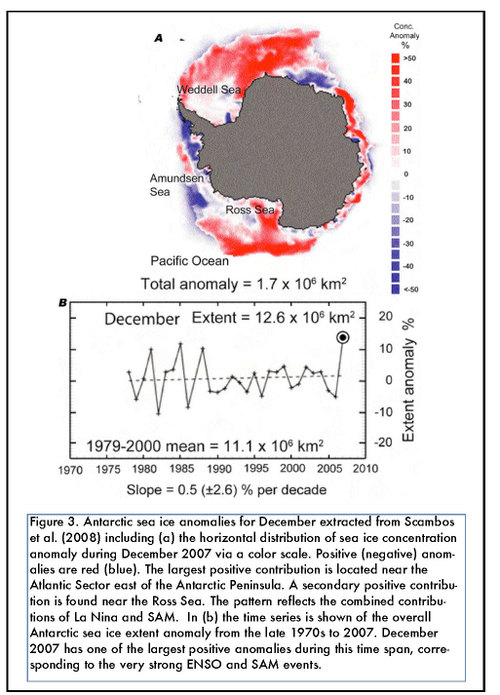

On this issue, it can be shown with a simple climate model that small cloud fluctuations assumed to occur with two modes of natural climate variability — the El Nino/La Nina phenomenon (Southern Oscillation), and the Pacific Decadal Oscillation — can explain 70% of the warming trend since 1900, as well as the nature of that trend: warming until the 1940s, no warming until the 1970s, and resumed warming since then. These results are shown in Fig. 3.

Fig. 3. A simple climate model forced with cloud cover variations assumed to be proportional to a linear combination of the Southern Oscillation Index (SOI) and Pacific Decadal Oscillation (PDO) index. The heat flux anomalies in (a), which then result in the modeled temperature response in (b), are assumed to be distributed over the top 27% of the global ocean (1,000 meters), and weak negative feedback has been assumed (4 W m⁻² K⁻¹).
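For readers unfamiliar with this kind of model, the sketch below shows the general form of a single-layer energy-balance model with a 1,000 meter ocean layer and a feedback parameter of 4 W m⁻² K⁻¹, as stated in the caption. It is not the code behind Fig. 3: the seawater density and specific heat, the one-year time step, the function names, and the constant test forcing are all assumptions made for illustration, and no SOI or PDO data are used.

```python
SECONDS_PER_YEAR = 365.25 * 24 * 3600
RHO_SEAWATER = 1025.0  # kg m^-3, ASSUMED typical value
CP_SEAWATER = 3990.0   # J kg^-1 K^-1, ASSUMED typical value


def heat_capacity(depth_m):
    """Heat capacity per unit area (J m^-2 K^-1) of an ocean layer."""
    return RHO_SEAWATER * CP_SEAWATER * depth_m


def time_constant_years(lam=4.0, depth_m=1000.0):
    """E-folding response time of the layer, in years."""
    return heat_capacity(depth_m) / lam / SECONDS_PER_YEAR


def run_model(forcing_w_m2, lam=4.0, depth_m=1000.0):
    """Integrate C dT/dt = F(t) - lam * T with one-year forward Euler steps.

    forcing_w_m2 -- sequence of yearly heat flux anomalies (W m^-2)
    Returns the temperature anomaly (K) at the end of each year.
    """
    c = heat_capacity(depth_m)
    temp, temps = 0.0, []
    for f in forcing_w_m2:
        temp += SECONDS_PER_YEAR * (f - lam * temp) / c
        temps.append(temp)
    return temps


# Hypothetical forcing: a constant 1 W m^-2 anomaly held for a century.
response = run_model([1.0] * 100)
print("time constant (years):", round(time_constant_years(), 1))
print("warming after 100 years (K):", round(response[-1], 3))
```

Under these assumed values the layer responds over a few decades, which is why slowly varying forcings of modest size can produce multi-decadal temperature trends in a model of this type.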

While this is not necessarily being presented as the only explanation for most of the warming in the last century, it does illustrate that there are potential explanations for recent warming other than just manmade greenhouse gas emissions. Significantly, this is an issue on which the IPCC has remained almost entirely silent. There has been virtually no published work on the possible role of internal climate variations in the warming of the last century.

3. Policy Implications

Obviously, what I am claiming today is of great importance to the global warming debate and related policy decisions, and it will surely be controversial. These results are not totally unprecedented, though, as other recently published research [6] has also led to the conclusion that the real climate system does not exhibit net positive feedback.

While it will take some time for the research community to digest this new information, it must be mentioned that new research contradicting the latest IPCC report is entirely consistent with the normal course of scientific progress. I predict that in the coming years, there will be a growing realization among the global warming research community that most of the climate change we have observed is natural, and that mankind’s role is relatively minor.

While other researchers need to further explore and validate my claims, I am heartened by the fact that my recent presentation of these results to an audience of approximately 40 weather and climate researchers at the University of Colorado in Boulder last week (on July 17, 2008) led to no substantial objections to either the data I presented or my interpretation of those data.

And, curiously, despite its importance to climate modeling activities, no one from Dr. Kevin Trenberth’s facility, the National Center for Atmospheric Research (NCAR), bothered to drive four miles down the road to attend my seminar, even though it was advertised at NCAR.

I hope that the Committee realizes that, if true, these new results mean that humanity will be largely spared the negative consequences of human-induced climate change. This would be good news that should be celebrated — not attacked and maligned.

And given that virtually no research into possible natural explanations for global warming has been performed, it is time for scientific objectivity and integrity to be restored to the field of global warming research. This Committee could, at a minimum, make a statement that encourages that goal.

REFERENCES

1. Spencer, R. W., and W. D. Braswell, 2008: Potential biases in cloud feedback diagnosis: A simple model demonstration. J. Climate, in press.

2. Allen, M. R., and D. J. Frame, 2007: Call off the quest. Science, 318, 582.

3. Spencer, R. W., W. D. Braswell, J. R. Christy, and J. Hnilo, 2007: Cloud and radiation budget changes associated with tropical intraseasonal oscillations. Geophys. Res. Lett., 34, L15707, doi:10.1029/2007GL029698.

4. Forster, P. M., and J. M. Gregory, 2006: The climate sensitivity and its components diagnosed from Earth Radiation Budget data. J. Climate, 19, 39-52.

5. Stephens, G. L., 2005: Cloud feedbacks in the climate system: A critical review. J. Climate, 18, 237-273.

6. Schwartz, S. E., 2007: Heat capacity, time constant, and sensitivity of the Earth’s climate system. J. Geophys. Res., 112, D24S05, doi:10.1029/2007JD008746.