I had an epiphany the other day: While skeptics and catastrophists debate the impact of CO2 on future temperatures, to a large extent we are arguing about the wrong thing. Nearly everyone on both sides of the debate agrees that, absent feedback, the effect of a doubling of CO2 from pre-industrial concentrations (i.e. 280 ppm to 560 ppm; we are at about 385 ppm today) is to warm the Earth by about 1°C ± 0.2°C. What we really should be arguing about is feedback.

In the IPCC Third Assessment Report, which is as good a proxy as any for the consensus catastrophist position, it is stated:

> If the amount of carbon dioxide were doubled instantaneously, with everything else remaining the same, the outgoing infrared radiation would be reduced by about 4 Wm⁻². In other words, the radiative forcing corresponding to a doubling of the CO2 concentration would be 4 Wm⁻². To counteract this imbalance, the temperature of the surface-troposphere system would have to increase by 1.2°C (with an accuracy of ±10%), in the absence of other changes.
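Dividing the two numbers in that quote gives the implied no-feedback sensitivity, roughly how much warming each watt per square meter of forcing produces before any feedback kicks in. The symbols below are labels chosen for illustration, not the IPCC's own notation:

$$
\lambda_0 = \frac{\Delta T_0}{\Delta F_{2\times}} \approx \frac{1.2\ ^\circ\text{C}}{4\ \text{W m}^{-2}} = 0.3\ ^\circ\text{C per W m}^{-2}
$$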

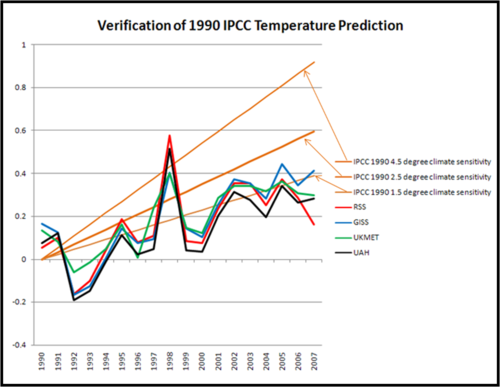

Skeptics would argue that the 1.2°C is (predictably) at the high end of the band, but in the ballpark nonetheless. The IPCC also points out that there is a diminishing-returns relationship between CO2 and temperature, such that each increment of CO2 has less effect on temperature than the last. Skeptics agree with this too. What this means in practice is that although the world, currently at 385 ppm CO2, is only about 38% of the way to a doubling of CO2 from pre-industrial times, we should already have seen about half of the temperature rise for a doubling, or, if the IPCC is correct, about 0.6°C (again absent feedback). This means that as CO2 concentrations rise from today's 385 ppm to 560 ppm toward the end of this century, we might expect another 0.6°C of warming.
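To check that arithmetic, here is a minimal Python sketch. It assumes warming scales with the logarithm of CO2 concentration, one common way to express the diminishing returns described above; that functional form and the function names are illustrative assumptions, while the 280, 385 and 560 ppm figures and the 1.2°C per doubling come from the text.

```python
import math

# Figures taken from the text above.
PREINDUSTRIAL_PPM = 280.0
TODAY_PPM = 385.0
DOUBLED_PPM = 2 * PREINDUSTRIAL_PPM  # 560 ppm
NO_FEEDBACK_WARMING_PER_DOUBLING = 1.2  # deg C, the IPCC figure quoted above


def fraction_of_doubling_linear(c_ppm: float, c0_ppm: float = PREINDUSTRIAL_PPM) -> float:
    """Share of the way to a doubling, counting ppm linearly."""
    return (c_ppm - c0_ppm) / c0_ppm


def fraction_of_doubling_warming(c_ppm: float, c0_ppm: float = PREINDUSTRIAL_PPM) -> float:
    """Share of a doubling's warming realized, ASSUMING warming ~ log(concentration)."""
    return math.log(c_ppm / c0_ppm) / math.log(2.0)


def no_feedback_warming(c_ppm: float) -> float:
    """Warming (deg C) relative to pre-industrial, with no feedback."""
    return NO_FEEDBACK_WARMING_PER_DOUBLING * fraction_of_doubling_warming(c_ppm)


linear = fraction_of_doubling_linear(TODAY_PPM)
log_share = fraction_of_doubling_warming(TODAY_PPM)
so_far = no_feedback_warming(TODAY_PPM)
remaining = no_feedback_warming(DOUBLED_PPM) - so_far

print(f"ppm share of doubling:     {linear:.1%}")
print(f"warming share of doubling: {log_share:.1%}")
print(f"warming so far:    {so_far:.2f} deg C")
print(f"warming remaining: {remaining:.2f} deg C")
```

With these inputs the script reports 37.5% of the way in ppm but about 46% of the warming, roughly 0.55°C so far and 0.65°C still to come, which round to the 0.6°C figures above.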

This is nothing! We probably would not have noticed the last 0.6°C if we had not been told it happened, and another 0.6°C would be trivial to manage. So, without feedback, even by the catastrophist estimates at the IPCC, warming from CO2 over the next century will not rise above nuisance level. Only massive amounts of positive feedback, as assumed by the IPCC, can turn this 0.6°C into a scary number. In the IPCC's words:

> To counteract this imbalance, the temperature of the surface-troposphere system would have to increase by 1.2°C (with an accuracy of ±10%), in the absence of other changes. In reality, due to feedbacks, the response of the climate system is much more complex. It is believed that the overall effect of the feedbacks amplifies the temperature increase to 1.5 to 4.5°C. A significant part of this uncertainty range arises from our limited knowledge of clouds and their interactions with radiation. …
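One way to see how much amplification that range implies is a minimal Python sketch of the textbook single-loop feedback relation ΔT = ΔT₀ / (1 − f), where f is the net feedback fraction. That relation and the function names are assumptions brought in for illustration; the IPCC text above does not state them.

```python
# ASSUMPTION: simple single-loop feedback model, dT = dT0 / (1 - f),
# where f is the net feedback fraction (f < 0 damps, 0 < f < 1 amplifies).
NO_FEEDBACK_WARMING = 1.2  # deg C per doubling, from the IPCC quote


def amplified_warming(no_feedback: float, f: float) -> float:
    """Warming after feedback for a net feedback fraction f (must be < 1)."""
    if f >= 1.0:
        raise ValueError("f >= 1 means a runaway response in this simple model")
    return no_feedback / (1.0 - f)


def implied_feedback_fraction(no_feedback: float, with_feedback: float) -> float:
    """Invert dT = dT0 / (1 - f) to recover f."""
    return 1.0 - no_feedback / with_feedback


for target in (1.5, 4.5):
    f = implied_feedback_fraction(NO_FEEDBACK_WARMING, target)
    print(f"{target} deg C per doubling implies f = {f:.2f}")

print(f"f = 0 gives {amplified_warming(NO_FEEDBACK_WARMING, 0.0):.1f} deg C")
```

Under this model the IPCC's 1.5 to 4.5°C range corresponds to net feedback fractions of about 0.20 to 0.73, while zero net feedback leaves the 1.2°C no-feedback figure unchanged and negative feedback pushes it lower.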

So debate about whether CO2 is a greenhouse gas is close to meaningless. The real debate should be: how much feedback can we expect? (By the way, have you ever heard the MSM mention the word "feedback" even once?) And it is here that the scientific "consensus" really breaks down. There is no good evidence that feedback is as strong as the values plugged into climate models, or even that it is positive. This quick analysis makes a pretty strong case that net feedback is close to zero. I won't go much further into feedback here, but suffice it to say that climate scientists are way out on a thin branch in assuming that a long-term stable process like climate is dominated by massive amounts of positive feedback. I discuss and explain feedback in much more detail here and here.

Update: Thanks to Steve McIntyre for digging the above quotes out of the Third Assessment Report. I have read the Fourth Assessment Report cover to cover and could not find a single simple statement breaking down warming between CO2 in isolation and CO2 with feedbacks. The numbers and the science have not changed, but the authors seem to want to bury this distinction, probably because the science behind the feedback analysis is so weak.